Achieving A Completely Open Source Implementation of Apple Code Signing and Notarization

August 08, 2022 at 08:08 AM | categories: Apple, Rust

As I've previously blogged in Pure Rust Implementation of Apple Code Signing (2021-04-14) and Expanding Apple Ecosystem Access with Open Source, Multi Platform Code Signing (2022-04-25), I've been hacking on an open source implementation of Apple code signing and notarization using the Rust programming language. This takes the form of the apple-codesign crate / library and its rcodesign CLI executable. (Documentation / GitHub project / crates.io).

As of that most recent post in April, I was pretty happy with the relative stability of the implementation: we were able to sign, notarize, and staple Mach-O binaries, directory bundles (.app, .framework bundles, etc), XAR archives / flat packages / .pkg installers, and DMG disk images. Except for the known limitations, if Apple's official codesign and notarytool tools support it, so do we.

This allows people to sign, notarize, and release Apple software from non-Apple operating systems like Linux and Windows. This opens up new avenues for Apple platform access.

A major limitation in previous versions of the apple-codesign crate was our reliance on Apple's Transporter tool for notarization. Transporter is a Java application made available for macOS, Linux, and Windows that speaks to Apple's servers and can upload assets to their notarization service. I used this tool at the time because it seemed to be officially supported by Apple and the path of least resistance to standing up notarization. But Transporter was a bit wonky to use and an extra dependency that you needed to install.

At WWDC 2022, Apple announced a new Notary API as part of the App Store Connect API. In what felt like a wink directly at me, Apple even calls out the possibility of leveraging this API to notarize from Linux! I knew as soon as I saw this that it was only a matter of time before I would be able to replace Transporter with a pure Rust client for the new HTTP API. (I was already thinking about using the unpublished HTTP API that notarytool uses. And from the limited reversing notes I have from before WWDC, it looks like the new official Notary API is very similar (possibly identical) to what notarytool uses. So kudos to Apple for opening up this access!)

I'm very excited to announce that we now have a pure Rust implementation of a client for Apple's Notary API in the apple-codesign crate. This means we can now notarize Apple software from any machine where you can get the Rust crate to compile. It also means we no longer depend on the 3rd party Apple Transporter application. Notarization, like code signing, is 100% open source Rust code.

As excited as I am to announce this new feature, I'm even more excited that it was largely implemented by a contributor, Robin Lambertz / @roblabla! They filed a GitHub feature request while WWDC 2022 was still ongoing and then submitted a PR a few days later. It took me a few months to get around to reviewing it (I try to avoid computer screens during summers), but it was a fantastic PR given the scope of the change. It never ceases to bring joy to me when someone randomly contributes greatness to open source.

So, as of the just-released 0.17 release of the apple-codesign Rust crate and its corresponding rcodesign CLI tool, you can now run rcodesign notary-submit to speak to Apple's Notary API using a pure Rust client. No more requirements on 3rd party, proprietary software. All you need to sign and notarize Apple applications is the self-contained rcodesign executable and a Linux, Windows, macOS, BSD, etc machine to run it on.

I'm stoked to finally achieve this milestone! There are probably thousands of companies and individuals who have wanted to release Apple software from non-macOS operating systems. (The existence and popularity of tools like fastlane seem to confirm this.) The historical lack of an Apple code signing and notarization solution that worked outside macOS has prevented this. Well, that barrier has officially fallen.

Release notes, documentation, and (self-signed) pre-built executables of the rcodesign executable for major platforms are available on the 0.17 release page.

Expanding Apple Ecosystem Access with Open Source, Multi Platform Code Signing

April 25, 2022 at 08:00 AM | categories: Apple, Rust

A little over one year ago, I announced a project to implement Apple code signing in pure Rust. There have been quite a number of developments since that post and I thought a blog post was in order. So here we are!

But first, some background on why we're here.

Background

(Skip this section if you just want to get to the technical bits.)

Apple runs some of the largest and most profitable software application ecosystems in existence. Gaining access to these ecosystems has traditionally required the use of macOS and membership in the Apple Developer Program.

For the most part this makes sense: if you want to develop applications for Apple operating systems you will likely utilize Apple's operating systems and Apple's official tooling for development and distribution. Sticking to the paved road is a good default!

But many people want more... flexibility. Open source developers, for example, often want to distribute cross-platform applications with minimal effort. There are entire programming language ecosystems where the operating system you are running on is abstracted away as an implementation detail for many applications. By creating a de facto requirement that macOS, iOS, etc development require direct access to macOS and (often above market priced) Apple hardware, the distribution requirements imposed by Apple's software ecosystems are effectively exclusionary and prevent interested parties from contributing to the ecosystem.

One of the aspects of software distribution on Apple platforms that trips a lot of people up is code signing and notarization. Essentially, you need to:

- Embed a cryptographic signature in applications that effectively attests to its authenticity from an Apple Developer Program associated account. (This is signing.)

- Upload your application to Apple so they can inspect it, verify it meets requirements, and likely store a copy of it. Apple then issues its own cryptographic signature, called a notarization ticket, which then needs to be stapled/attached to the application being distributed so Apple operating systems can trust it. (This is notarization.)

Historically, these steps required Apple proprietary software run exclusively from macOS. This means that even if you are in a software ecosystem like Rust, Go, or the web platform where you can cross-compile apps without direct access to macOS (testing is obviously a different story), you would still need macOS somewhere if you wanted to sign and notarize your application. And signing and notarization is effectively required on macOS due to default security settings. On mobile platforms like iOS, it is impossible to distribute applications that aren't signed and notarized unless you are running a jailbroken device.

A lot of people (myself included) have grumbled at these requirements. Why should I be forced to involve an Apple machine as part of my software release process if I don't need macOS to build my application? Why do I have to go through a convoluted dance to sign and notarize my application at release time - can't it be more streamlined?

When I looked at this space last year, I saw some obvious inefficiencies and room to improve. So as I said then, I foolishly set out to reimplement Apple code signing so developers would have more flexibility and opportunity for distributing applications to Apple's ecosystems.

The ultimate goal of this work is to expand Apple ecosystem access to more developers. A year later, I believe I'm delivering a product capable of doing this.

One Year Later

Foremost, I'm excited to announce the release of rcodesign 0.14.0. This is the first time I'm publishing pre-built binaries (Linux, Windows, and macOS) of rcodesign. This reflects my confidence in the relative maturity of the software.

In case you are wondering, yes, the macOS rcodesign executable is self-signed: it was signed by a GitHub Actions Linux runner using a code signing certificate exclusive to a YubiKey. That YubiKey was plugged into a Windows 11 desktop next to my desk. The rcodesign executable was not copied between machines as part of the signing operation. Read on to learn about the sorcery that made this possible.

A lot has changed in the apple-codesign project / Rust crate in the last year! Just look at the changelog!

The project was renamed from tugger-apple-codesign. (If you installed via cargo install, you'll need to run cargo install --force apple-codesign to force Cargo to overwrite the rcodesign executable with one from a different crate.)

The rcodesign CLI executable is still there and more powerful than ever. You can still sign Apple applications from Linux, Windows, macOS, and any other platform you can get the Rust program to compile on.

There is now Sphinx documentation for the project. This is published on readthedocs.io alongside PyOxidizer's documentation (because I'm using a monorepo). There's some general documentation in there, such as a guide on how to selectively bypass Gatekeeper by deploying your own alternative code signing PKI to parallel Apple's. (This seems like something many companies would want but for whatever reason I'm not aware of anyone doing this - possibly because very few people understand how these systems work.)

There are bug fixes galore. When I look back at the state of rcodesign when I first blogged about it, I think of how naive I was. There were a myriad of applications that wouldn't pass notarization because of a long tail of bugs. There are still known issues. But I believe many applications will successfully sign and notarize now. I consider failures novel and worthy of bug reports - so please report them!

Read on to learn about some of the notable improvements in the past year (many of them occurring in the last two months).

Support for Signing Bundles, DMGs, and .pkg Installers

When I announced this project last year, only Mach-O binaries and trivially simple .app bundles were signable. And even then there were a ton of subtle issues.

rcodesign sign can now sign more complex bundles, including many nested bundles. There are reports of iOS app bundles signing correctly! (However, we don't yet have good end-user documentation for signing iOS apps. I will gladly accept PRs to improve the documentation!)

The tool also gained support for signing .dmg disk image files and .pkg flat package installers.

Known limitations with signing are now documented in the Sphinx docs.

I believe rcodesign now supports signing all the major file formats used for Apple software distribution. If you find something that doesn't sign and it isn't documented as a known issue with an existing GitHub issue tracking it, please report it!

Support for Notarization on Linux, Windows, and macOS

Apple publishes a Java tool named Transporter that enables you to upload artifacts to Apple for notarization. They make this tool available for Linux, Windows, and of course macOS.

While this tool isn't open source (as far as I know), usage of this tool enables you to notarize from Linux and Windows while still using Apple's official tooling for communicating with their servers.

rcodesign now has support for invoking Transporter and uploading artifacts to Apple for notarization. We now support notarizing bundles, .dmg disk images, and .pkg flat installer packages. I've successfully notarized all of these application types from Linux.

(I'm capable of implementing an alternative uploader in pure Rust, but without assurances that Apple won't bring down the ban hammer for violating terms of use, this is a bridge I'm not yet willing to cross. The requirement to use Transporter is literally the only thing standing in the way of making rcodesign an all-in-one single file executable tool for signing and notarizing Apple software and I really wish I could deliver this user experience win without reprisal.)

With support for both signing and notarizing all application types, it is now possible to release Apple software without macOS involved in your release process.

YubiKey Integration

I try to use my YubiKeys as much as possible because a secret or private key stored on a YubiKey is likely more secure than a secret or private key sitting around on a filesystem somewhere. If you hack my machine, you can likely gain access to my private keys. But you will need physical access to my YubiKey and to compel or coerce me into unlocking it in order to gain access to its private keys.

rcodesign now has support for using YubiKeys for signing operations. This does require an off-by-default smartcard Cargo feature. So if building manually, you'll need to run e.g. cargo install --features smartcard apple-codesign.

The YubiKey integration comes courtesy of the amazing yubikey Rust crate. This crate will speak directly to the smartcard APIs built into macOS and Windows. So if you have an rcodesign build with YubiKey support enabled, YubiKeys should just work. Try it by plugging in your YubiKey and running rcodesign smartcard-scan.

YubiKey integration has its own documentation.

I even implemented some commands to make it easy to manage the code signing certificates on your YubiKey. For example, you can run rcodesign smartcard-generate-key --smartcard-slot 9c to generate a new private key directly on the device and then rcodesign generate-certificate-signing-request --smartcard-slot 9c --csr-pem-path csr.pem to export a Certificate Signing Request (CSR) for that key, which you can exchange for an Apple-issued signing certificate at developer.apple.com. This means you can easily create code signing certificates whose private key was generated directly on the hardware device and can never be exported.

Generating keys this way is widely considered to be more secure than storing keys in software vaults, like Apple's Keychains.

Remote Code Signing

The feature I'm most excited about is what I'm calling remote code signing.

Remote code signing allows you to delegate the low-level cryptographic signature operations in code signing to a separate machine.

It's probably easiest to just demonstrate what it can do.

Earlier today I signed a macOS universal Mach-O executable from a GitHub-hosted Linux GitHub Actions runner using a YubiKey physically attached to the Windows 11 machine next to my desk at home. The signed application was not copied between machines.

Here's how I did it.

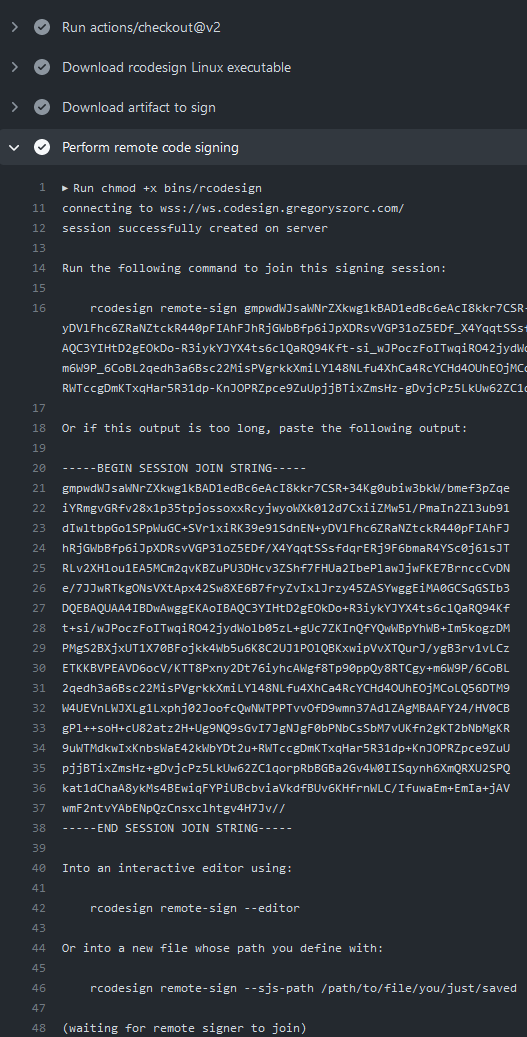

I have a GitHub Actions workflow that calls rcodesign sign --remote-signer.

I manually triggered that workflow and started watching the near real time

job output with my browser. Here's a screenshot of the job logs:

rcodesign sign --remote-signer prints out some instructions (including a

wall of base64 encoded data) for what to do next. Importantly, it requests that

someone else run rcodesign remote-sign to continue the signing process.

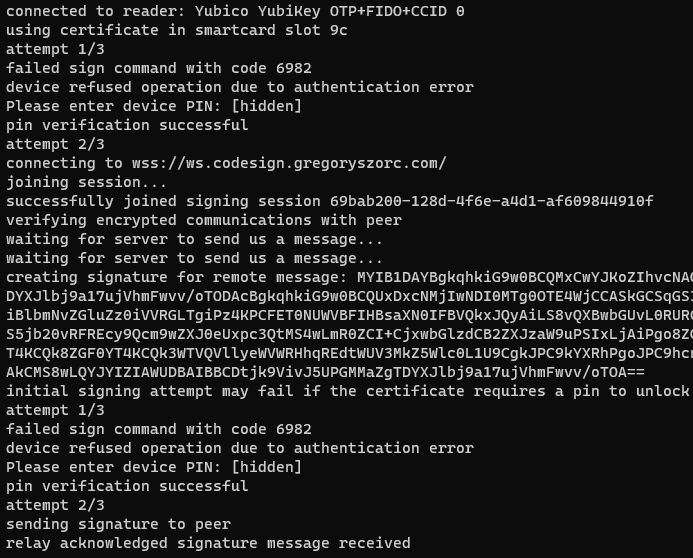

And here's a screenshot of me doing that from the Windows terminal:

This log shows us connecting and authenticating with the YubiKey along with some status updates regarding speaking to a remote server.

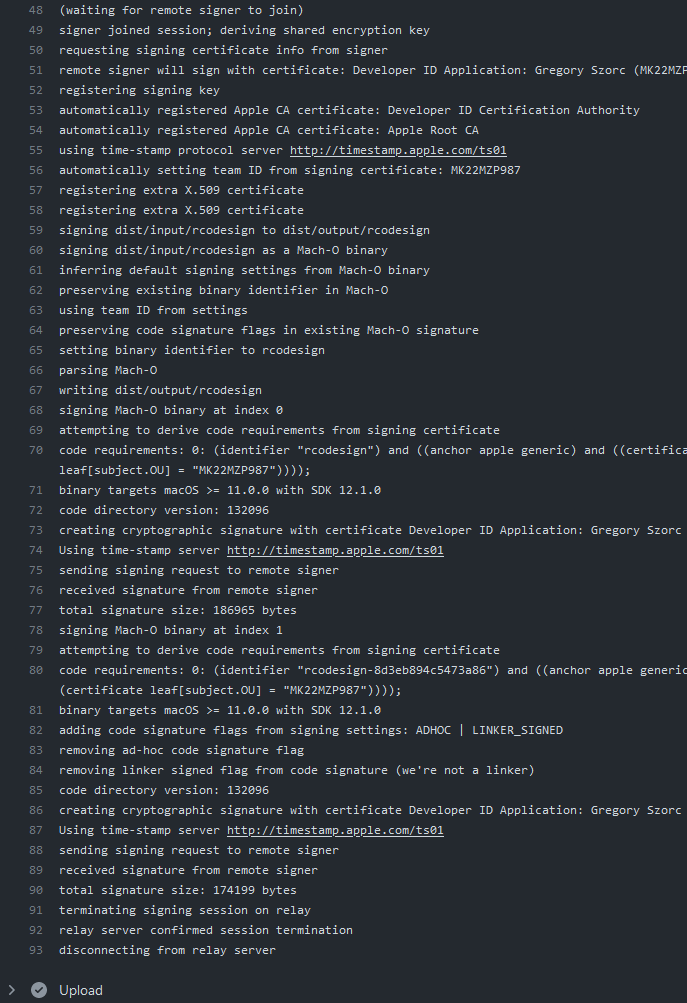

Finally, here's a screenshot of the GitHub Actions job output after I ran that command on my Windows machine:

Remote signing enabled me to sign a macOS application from a GitHub Actions runner operated by GitHub while using a code signing certificate securely stored on my YubiKey plugged into a Windows machine hundreds of kilometers away from the GitHub Actions runner. Magic, right?

What's happening here is the 2 rcodesign processes are communicating with each other via websockets bridged by a central relay server. (I operate a default server free of charge. The server is open source and a Terraform module is available if you want to run your own server with hopefully just a few minutes of effort.)

When the initiating machine wants to create a signature, it sends a message to the signer requesting a cryptographic signature. The signature is then sent back to the initiator, which incorporates it.
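To make that message flow concrete, here is a minimal, single-process sketch of the three roles using threads and std::sync::mpsc channels in place of websockets. Everything in it is hypothetical: the `Message` enum, `remote_sign`, and the XOR-based `toy_sign` stand-in are not rcodesign's protocol or API, and there is no encryption at all. It only illustrates that the relay forwards messages and never touches the signing key.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical messages for this toy model (not rcodesign's wire format).
#[derive(Debug)]
enum Message {
    SignRequest { session: u32, digest: Vec<u8> },
    Signature { session: u32, signature: Vec<u8> },
}

/// Stand-in for the private-key operation on the signer's machine.
/// NOT a real signature: it just XORs bytes so the round trip is checkable.
fn toy_sign(digest: &[u8]) -> Vec<u8> {
    digest.iter().map(|b| b ^ 0xA5).collect()
}

/// One signing round trip: initiator -> relay -> signer -> relay -> initiator.
fn remote_sign(session: u32, digest: Vec<u8>) -> Vec<u8> {
    let (initiator_tx, relay_rx) = mpsc::channel::<Message>();
    let (relay_to_signer_tx, signer_rx) = mpsc::channel::<Message>();
    let (signer_tx, relay_back_rx) = mpsc::channel::<Message>();
    let (relay_to_initiator_tx, initiator_rx) = mpsc::channel::<Message>();

    // Relay: forwards messages in both directions and never holds a key.
    let relay = thread::spawn(move || {
        let request = relay_rx.recv().expect("initiator hung up");
        relay_to_signer_tx.send(request).expect("signer hung up");
        let response = relay_back_rx.recv().expect("signer hung up");
        relay_to_initiator_tx.send(response).expect("initiator hung up");
    });

    // Signer: holds the (toy) key and answers a single request.
    let signer = thread::spawn(move || {
        if let Ok(Message::SignRequest { session, digest }) = signer_rx.recv() {
            let signature = toy_sign(&digest);
            signer_tx
                .send(Message::Signature { session, signature })
                .expect("relay hung up");
        }
    });

    // Initiator: asks for a signature, then waits for the matching response.
    initiator_tx
        .send(Message::SignRequest { session, digest })
        .expect("relay hung up");
    let signature = match initiator_rx.recv().expect("relay hung up") {
        Message::Signature { session: s, signature } if s == session => signature,
        other => panic!("unexpected message: {:?}", other),
    };

    relay.join().expect("relay panicked");
    signer.join().expect("signer panicked");
    signature
}
```

In the real feature the initiator and signer are separate machines and the messages are end-to-end encrypted, which this sketch deliberately leaves out.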

I designed this feature with automated releases from CI systems (like GitHub Actions) in mind. I wanted a way where I could streamline the code signing and release process of applications without having to give a low trust machine in CI ~unlimited access to my private signing key. But the more I thought about it the more I realized there are likely many other scenarios where this could be useful. Have you ever emailed or Dropboxed an application for someone else to sign because you don't have an Apple issued code signing certificate? Now you have an alternative solution that doesn't require copying files around! As long as you can see the log output from the initiating machine or have that output communicated to you (say over a chat application or email), you can remotely sign files on another machine!

An Aside on the Security of Remote Signing

At this point, I'm confident the more security conscious among you have been grimacing for a few paragraphs now. Websockets through a central server operated by a 3rd party?! Giving remote machines access to perform code signing against arbitrary content?! Your fears and skepticism are 100% justified: I'd be thinking the same thing!

I fully recognize that a service that facilitates remote code signing makes for a very lucrative attack target! If abused, it could be used to coerce parties with valid code signing certificates to sign unwanted code, like malware. There are many, many, many wrong ways to implement such a feature. I pondered for hours about the threat modeling and how to make this feature as secure as possible.

Remote Code Signing Design and Security Considerations captures some of my high level design goals and security assessments. And Remote Code Signing Protocol goes into detail about the communications protocol, including the crypto (actual cryptography, not the fad) involved. The key takeaways are the protocol and server are designed such that a malicious server or man-in-the-middle cannot forge signature requests. Signing sessions expire after a few minutes and 3rd parties (or the server) can't inject malicious messages that would result in unwanted signatures. There is an initial handshake to derive a session ephemeral shared encryption key and from there symmetric encryption keys are used so all meaningful messages between peers are end-to-end encrypted. About the worst a malicious server could do is conduct a denial of service. This is by design.
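As a rough intuition for what deriving a session ephemeral shared key and expiring a session look like, the sketch below runs a textbook finite-field Diffie-Hellman exchange with toy 32-bit parameters, plus a session that stops handing out its key after a fixed lifetime. This is an illustrative assumption, not the key agreement rcodesign uses (the protocol docs describe that); the parameters are far too small to be secure, and the 300-second lifetime is an arbitrary stand-in for "a few minutes".

```rust
use std::time::{Duration, Instant};

// Toy parameters: 2^32 - 5 is prime, but far too small for any real use.
const TOY_PRIME: u64 = 4_294_967_291;
const TOY_GENERATOR: u64 = 5;

/// Modular exponentiation by repeated squaring: base^exp mod modulus.
fn mod_pow(mut base: u64, mut exp: u64, modulus: u64) -> u64 {
    let mut result = 1u64 % modulus;
    base %= modulus;
    while exp > 0 {
        if exp & 1 == 1 {
            result = (result as u128 * base as u128 % modulus as u128) as u64;
        }
        base = (base as u128 * base as u128 % modulus as u128) as u64;
        exp >>= 1;
    }
    result
}

/// Public value g^private mod p, which each peer sends to the other.
fn public_value(private: u64) -> u64 {
    mod_pow(TOY_GENERATOR, private, TOY_PRIME)
}

/// peer_public^own_private mod p; both peers arrive at g^(ab) mod p.
fn shared_secret(own_private: u64, peer_public: u64) -> u64 {
    mod_pow(peer_public, own_private, TOY_PRIME)
}

/// A hypothetical session whose key is only usable for a fixed lifetime.
struct Session {
    key: u64,
    created: Instant,
    lifetime: Duration,
}

impl Session {
    /// Returns the key while the session is fresh, None once it has expired.
    fn key_at(&self, now: Instant) -> Option<u64> {
        if now.saturating_duration_since(self.created) > self.lifetime {
            None
        } else {
            Some(self.key)
        }
    }
}
```

The relay only ever sees the two public values, which is why a passive server cannot compute the shared key; a real design must also authenticate those values to stop an active man-in-the-middle.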

As I argue in Security Analysis in the Bigger Picture, I believe that my implementation of remote signing is more secure than many common practices because common practices today entail making copies of private keys and giving low trust machines (like CI workers) access to private keys. Or files are copied around without cryptographic chain-of-custody to prove against tampering. Yes, remote signing introduces a vector for remote access to use signing keys. But practiced as I intended, remote signing can eliminate the need to copy private keys or grant ~unlimited access to them. From a threat modeling perspective, I think the net restriction in key access makes remote signing more secure than the private key management practices used by many today.

All that being said, the giant asterisk here is I implemented my own cryptosystem to achieve end-to-end message security. If there are bugs in the design or implementation, that cryptosystem could come crashing down, bringing defenses against message forgery with it. At that point, a malicious server or privileged network actor could potentially coerce someone into signing unwanted software. But this is likely the extent of the damage: an offline attack against the signing key should not be possible since signing requires presence and since the private key is never transmitted over the wire. Even without the end-to-end encryption, the system is arguably more secure than leaving your private key lingering around as an easily exfiltrated CI secret (or similar).

(I apologize to every cryptographer I worked with at Mozilla who beat into me the commandment that thou shall not roll their own crypto: I have sinned and I feel remorseful.)

Cryptography is hard. And I'm sure I made plenty of subtle mistakes. Issue #552 tracks getting an audit of this protocol and code performed. And the aforementioned protocol design docs call out some of the places where I question decisions I've made.

If you would be interested in doing a security review on this feature, please get in touch on issue #552 or send me an email. If there's one immediate outcome I'd like from this blog post it would be for some white hat^Hknight to show up and give me peace of mind about the cryptosystem implementation.

Until then, please assume the end-to-end encryption is completely flawed. Consider asking someone with security or cryptographer in their job title for their opinion on whether this feature is safe for you to use. Hopefully we'll get a security review done soon and this caveat can go away!

If you do want to use this feature, Remote Code Signing contains some usage documentation, including how to use it with GitHub Actions. (I could also use some help productionizing a reusable GitHub Action to make this more turnkey! Although I'm hesitant to do it before I know the cryptosystem is sound.)

That was a long introduction to remote code signing. But I couldn't in good faith present the feature without addressing the security aspect. Hopefully I didn't scare you away! Traditional / local signing should have no security concerns (beyond the willingness to run software written by somebody you probably don't know, of course).

Apple Keychain Support

As of today's 0.14 release we now have early support for signing with code signing certificates stored in Apple Keychains! If you created your Apple code signing certificates in Keychain Access or Xcode, this is probably where your code signing certificates live.

I held off implementing this for the longest time because I didn't perceive there to be a benefit: if you are on macOS, just use Apple's official tooling. But with rcodesign gaining support for remote code signing and some other features that could make it a compelling replacement for Apple tooling on all platforms, I figured we should provide the feature so we stop encouraging people to export private keys from Keychains.

This integration is very young and there's still a lot that can be done, such as automatically using an appropriate signing certificate based on what you are signing. Please file feature request issues if there's a must-have feature you are missing!

Better Debugging of Failures

Apple's code signing is complex. It is easy for there to be subtle differences between Apple's tooling and rcodesign.

rcodesign now has print-signature-info and diff-signatures commands to dump and compare YAML metadata pertinent to code signing to make it easier to compare behavior between code signing implementations and even multiple signing operations.

The documentation around debugging and reporting bugs now emphasizes using these tools to help identify bugs.
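The idea behind comparing two signature dumps can be pictured as a diff of two flattened key/value maps. The sketch below is a hypothetical illustration, not how diff-signatures is implemented: it assumes each YAML dump has already been flattened into a `BTreeMap` of dotted keys, and the `Change` type and `diff_metadata` function are invented for this example.

```rust
use std::collections::BTreeMap;

/// One difference between two flattened signature metadata dumps.
#[derive(Debug, PartialEq)]
enum Change {
    OnlyLeft(String),
    OnlyRight(String),
    Differs { key: String, left: String, right: String },
}

/// Compares two flattened dumps; BTreeMap keeps the output in sorted key order.
fn diff_metadata(
    left: &BTreeMap<String, String>,
    right: &BTreeMap<String, String>,
) -> Vec<Change> {
    let mut changes = Vec::new();
    for (key, left_value) in left {
        match right.get(key) {
            None => changes.push(Change::OnlyLeft(key.clone())),
            Some(right_value) if right_value != left_value => changes.push(Change::Differs {
                key: key.clone(),
                left: left_value.clone(),
                right: right_value.clone(),
            }),
            Some(_) => {}
        }
    }
    // Keys present only in the right-hand dump.
    for key in right.keys() {
        if !left.contains_key(key) {
            changes.push(Change::OnlyRight(key.clone()));
        }
    }
    changes
}
```

Sorting keys makes two dumps from different tools (say Apple's codesign and rcodesign) line up field by field, so only the meaningful differences remain.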

A Request For Users and Feedback

I now believe rcodesign to be generally usable. I've thrown a lot of random software at it and I feel like most of the big bugs and major missing features are behind us.

But I also feel it hasn't yet received wide enough attention to have confidence in that assessment.

If you want to help the development of this tool, the most important actions you can take are to attempt signing / notarization operations with it and report your results.

Does rcodesign spark joy? Please leave a comment in the GitHub discussion for the latest release!

Does rcodesign not work? I would very much appreciate a bug report! Details on how to file good bugs are in the docs.

Have general feedback? UI is confusing? Documentation is insufficient? Leave a comment in the aforementioned discussion. Or create a GitHub issue if you think it is actionable. I can't fix what I don't know about!

Have private feedback? Send me an email.

Conclusion

I could write thousands of words about all I learned from hacking on this project.

I've learned way too much about too many standards and specifications in the crypto space. RFCs 2986, 3161, 3280, 3281, 3447, 4210, 4519, 5280, 5480, 5652, 5869, 5915, 5958, and 8017 plus probably a few more. How cryptographic primitives are stored and expressed: ASN.1, OIDs, BER, DER, PEM, SPKI, PKCS#1, PKCS#8. You can show me the raw parse tree for an ASN.1 data structure and I can probably tell you what RFC defines it. I'm not proud of this. But I will say actually knowing what every field in an X.509 certificate does or the many formats that cryptographic keys are expressed in seems empowering. Before, I would just search for the openssl incantation to do something. Now, I know which ASN.1 data structures are involved and how to manipulate the fields within.

I've learned way too much about the minutiae of how Apple code signing actually works. The mechanism is way too complex for something in the security space. There was at least one high profile Gatekeeper bug in the past year allowing improperly signed code to run. I suspect there will be more: the surface area to exploit is just too large.

I think I'm proud of building an open source implementation of Apple's code signing. To my knowledge nobody else has done this outside of Apple. At least not to the degree I have. Then factor in that I was able to do this without access (or willingness) to look at Apple source code and much of the progress was achieved by diffing and comparing results with Apple's tooling. Hours of staring at diffoscope and comparing binary data structures. Hours of trying to find the magical settings that enabled a SHA-1 or SHA-256 digest to agree. It was tedious work for sure. I'll likely never see a financial return on the time equivalent it took me to develop this software. But, I suppose I can nerd brag that I was able to implement this!

But the real reward for this work will be if it opens up avenues to more (open source) projects distributing to the Apple ecosystems. This has historically been challenging for multiple reasons and many open source projects have avoided official / proper distribution channels to avoid the pain (or in some cases because of philosophical disagreements with the premise of having a walled software garden in the first place). I suspect things will only get worse, as I feel it is inevitable Apple clamps down on signing and notarization requirements on macOS due to the rising costs of malware and ransomware. So having an alternative, open source, and multi-platform implementation of Apple code signing seems like something important that should exist in order to provide opportunities to otherwise excluded developers. I would be humbled if my work empowers others. And this is all the reward I need.

Pure Rust Implementation of Apple Code Signing

April 14, 2021 at 01:45 PM | categories: PyOxidizer, Apple, Rust

A few weeks ago I (foolishly?) set out to implement Apple code signing (what Apple's codesign tool does) in pure Rust.

I wanted to quickly announce on this blog the existence of the project and the news that as of a few minutes ago, the tugger-apple-codesign crate implementing the code signing functionality is now published on crates.io!

So, you can now sign Apple binaries and bundles on non-Apple hardware by doing something like this:

$ cargo install tugger-apple-codesign

$ rcodesign sign /path/to/input /path/to/output

Current features include:

- Robust support for parsing embedded signatures and most related data structures. rcodesign extract can be used to extract various signature data in raw or human readable form.

- Parse and verify RFC 5652 Cryptographic Message Syntax (CMS) signature data.

- Sign binaries. If a code signing certificate key pair is provided, a CMS signature will be created. This includes support for Time-Stamp Protocol (TSP) / RFC 3161 tokens. If no key pair is provided, you get an ad-hoc signature.

- Signing bundles. Nested bundles and binaries will automatically be signed. Non-code resources will be digested and a CodeResources XML file will be produced.
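For a sense of what parsing embedded signatures involves, here is a minimal sketch that reads only the header of an embedded signature SuperBlob: a big-endian magic (0xfade0cc0), a total length, and a count, followed by (slot type, offset) index entries. The constant and layout follow XNU's `cs_blobs.h` definitions as I understand them; the struct and function names are hypothetical, and a real parser would also validate `length` and decode each referenced blob (slot 0 is the code directory, slot 2 the requirements, and 0x10000 the CMS signature).

```rust
/// Magic for an embedded signature SuperBlob (CSMAGIC_EMBEDDED_SIGNATURE).
const CSMAGIC_EMBEDDED_SIGNATURE: u32 = 0xfade_0cc0;

#[derive(Debug, PartialEq)]
struct BlobIndex {
    slot_type: u32,
    offset: u32,
}

#[derive(Debug, PartialEq)]
struct SuperBlobHeader {
    length: u32,
    entries: Vec<BlobIndex>,
}

/// Reads a big-endian u32 at `at`, or None if the input is too short.
fn read_u32_be(data: &[u8], at: usize) -> Option<u32> {
    let b = data.get(at..at + 4)?;
    Some(u32::from_be_bytes([b[0], b[1], b[2], b[3]]))
}

/// Parses the SuperBlob header and its index; blob contents are not decoded.
fn parse_superblob(data: &[u8]) -> Result<SuperBlobHeader, String> {
    let magic = read_u32_be(data, 0).ok_or("truncated magic")?;
    if magic != CSMAGIC_EMBEDDED_SIGNATURE {
        return Err(format!("unexpected magic 0x{:08x}", magic));
    }
    let length = read_u32_be(data, 4).ok_or("truncated length")?;
    let count = read_u32_be(data, 8).ok_or("truncated count")?;
    let mut entries = Vec::new();
    for i in 0..count as usize {
        // Each index entry is 8 bytes and starts right after the 12-byte header.
        let base = 12 + i * 8;
        let slot_type = read_u32_be(data, base).ok_or("truncated index")?;
        let offset = read_u32_be(data, base + 4).ok_or("truncated index")?;
        entries.push(BlobIndex { slot_type, offset });
    }
    Ok(SuperBlobHeader { length, entries })
}
```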

The most notable missing features are:

- No support for obtaining signing keys from keychains. If you want to sign with a cryptographic key pair, you'll need to point the tool at a PEM encoded key pair and CA chain.

- No support for parsing the Code Signing Requirements language. We can parse the binary encoding produced by csreq -b and convert it back to this DSL. But we don't parse the human friendly language.

- No support for notarization.
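To illustrate what converting the binary encoding back to the DSL means, below is a minimal decoder for a small subset of the binary requirement expression format: each node is a big-endian u32 opcode, string operands are length-prefixed and padded to a 4-byte boundary, and `and` / `or` are followed by their two sub-expressions. The opcode values are my understanding of Apple's Security framework definitions and should be treated as an assumption; the `Reader` type and function names are hypothetical, and everything else (certificate fields, info keys, and so on) is deliberately unsupported.

```rust
// Opcode values as I understand Apple's Security framework definitions (assumption).
const OP_FALSE: u32 = 0;
const OP_TRUE: u32 = 1;
const OP_IDENT: u32 = 2;
const OP_APPLE_ANCHOR: u32 = 3;
const OP_AND: u32 = 6;
const OP_OR: u32 = 7;
const OP_NOT: u32 = 9;

/// Cursor over big-endian requirement expression bytes.
struct Reader<'a> {
    data: &'a [u8],
    pos: usize,
}

impl<'a> Reader<'a> {
    fn read_u32(&mut self) -> Result<u32, String> {
        let data = self.data;
        let b = data.get(self.pos..self.pos + 4).ok_or("truncated u32")?;
        self.pos += 4;
        Ok(u32::from_be_bytes([b[0], b[1], b[2], b[3]]))
    }

    /// Reads a length-prefixed string whose payload is padded to 4 bytes.
    fn read_string(&mut self) -> Result<String, String> {
        let len = self.read_u32()? as usize;
        let data = self.data;
        let b = data.get(self.pos..self.pos + len).ok_or("truncated data")?;
        let s = String::from_utf8(b.to_vec()).map_err(|e| e.to_string())?;
        self.pos += (len + 3) & !3;
        Ok(s)
    }

    /// Recursively decodes one expression node into requirement-language text.
    fn read_expr(&mut self) -> Result<String, String> {
        let op = self.read_u32()?;
        match op {
            OP_FALSE => Ok("never".to_string()),
            OP_TRUE => Ok("always".to_string()),
            OP_IDENT => Ok(format!("identifier \"{}\"", self.read_string()?)),
            OP_APPLE_ANCHOR => Ok("anchor apple".to_string()),
            OP_AND | OP_OR => {
                let left = self.read_expr()?;
                let right = self.read_expr()?;
                let word = if op == OP_AND { "and" } else { "or" };
                Ok(format!("({} {} {})", left, word, right))
            }
            OP_NOT => Ok(format!("!({})", self.read_expr()?)),
            other => Err(format!("unsupported opcode {}", other)),
        }
    }
}

/// Decodes an expression (without the surrounding requirement blob header).
fn decode_requirement_expression(data: &[u8]) -> Result<String, String> {
    Reader { data, pos: 0 }.read_expr()
}
```

Fully parenthesizing every `and` / `or` sidesteps operator precedence; going the other direction (parsing the human friendly language) is the harder half that the original list calls out as missing.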

All of these could likely be implemented. However, I am not actively working on any of these features. If you would like to contribute support, make noise in the GitHub issue tracker.

The Rust API, CLI, and documentation are still a bit rough around the edges. I haven't performed thorough QA on aspects of the functionality. However, the tool is able to produce signed binaries that Apple's canonical codesign tool says are well-formed. So I'm reasonably confident some of the functionality works as intended. If you find bugs or missing features, please report them on GitHub. Or even better: submit pull requests!

As part of this project, I also created and published the cryptographic-message-syntax crate, which is a pure Rust partial implementation of RFC 5652, which defines the cryptographic message signing mechanism. This RFC is a bit dated and seems to have been superseded by RPKI. So you may want to look elsewhere before inventing new signing mechanisms that use this format.
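CMS structures are ASN.1 data encoded with DER, so parsing starts with reading each element's tag and length. As a minimal sketch of that first step (not code from the cryptographic-message-syntax crate), the hypothetical function below reads a single-byte tag and a DER length, rejecting the BER-only indefinite form and non-minimal long-form lengths. High tag numbers are left unsupported for brevity.

```rust
/// Tag, content length, and number of header bytes for one DER element.
#[derive(Debug, PartialEq)]
struct Header {
    tag: u8,
    length: usize,
    header_len: usize,
}

/// Parses the tag and length octets at the start of a DER element.
fn parse_der_header(input: &[u8]) -> Result<Header, String> {
    let tag = *input.get(0).ok_or("missing tag")?;
    if tag & 0x1f == 0x1f {
        return Err("high-tag-number form not supported in this sketch".into());
    }
    let first = *input.get(1).ok_or("missing length")?;
    // Short form: lengths 0..=127 fit in the low seven bits.
    if first & 0x80 == 0 {
        return Ok(Header { tag, length: first as usize, header_len: 2 });
    }
    // Long form: the low seven bits give the number of big-endian length bytes.
    let n = (first & 0x7f) as usize;
    if n == 0 {
        return Err("indefinite length is BER-only, not allowed in DER".into());
    }
    if n > std::mem::size_of::<usize>() {
        return Err("length too large".into());
    }
    let bytes = input.get(2..2 + n).ok_or("truncated length")?;
    if bytes[0] == 0 {
        return Err("non-minimal length encoding".into());
    }
    let mut length = 0usize;
    for &b in bytes {
        length = (length << 8) | b as usize;
    }
    if length < 0x80 {
        return Err("long form used for a short length".into());
    }
    Ok(Header { tag, length, header_len: 2 + n })
}
```

For example, a CMS SignedData wrapper usually begins with 0x30 (SEQUENCE) followed by a long-form length such as 0x82 0x01 0x00, meaning 256 bytes of content follow the 4-byte header.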

Finally, it appears the Windows code signing mechanism (Authenticode) also uses RFC 5652 (or a variant thereof) for cryptographic signatures. So by implementing Apple code signatures, I believe I've done most of the legwork to implement Windows/PE signing! I'll probably implement Windows signing in a new crate whenever I hook up automatic code signing to PyOxidizer, which was the impetus for this work (I want to make it possible to build distributable Apple programs without Apple hardware, using as many open source Rust components as possible).

Global Kernel Locks in APFS

October 29, 2018 at 02:20 PM | categories: Python, Mercurial, Apple

Over the past several months, a handful of people had been complaining that Mercurial's test harness was executing much slower on Macs. But this slowdown seemingly wasn't occurring on Linux or Windows. And not every Mac user experienced the slowness!

Before jetting off to the Mercurial 4.8 developer meetup in Stockholm a few weeks ago, I sat down with a relatively fresh 6+6 core MacBook Pro and experienced the problem firsthand: on my 4+4 core i7-6700K running Linux, the Mercurial test harness completes in ~12 minutes, but on this MacBook Pro, it was executing in ~38 minutes! On paper, this result doesn't make any sense because there's no way that the MacBook Pro should be ~3x slower than that desktop machine.

Looking at Activity Monitor when running the test harness with 12 tests in parallel revealed something odd: the system was spending ~75% of overall CPU time inside the kernel! When reducing the number of tests that ran in parallel, the percentage of CPU time spent in the kernel decreased and the overall test harness execution time also decreased. This kind of behavior is usually a sign of something very inefficient in kernel land.

I sample-profiled all processes on the system while running the Mercurial test harness. Aggregate thread stacks revealed a common pattern: readdir() being in the stack.

Upon closer examination of the stacks, readdir() calls into apfs_vnop_readdir(), which calls into some functions with bt or btree in their name, which call into lck_mtx_lock(), lck_mtx_lock_grab_mutex() and various other functions with lck_mtx in their name. And the caller of most readdir() calls appeared to be Python 2.7's module importing mechanism (notably import.c:case_ok()).

APFS refers to the Apple File System, which Apple introduced in 2017 and which is the default filesystem for new versions of macOS and iOS. When an older Mac is upgraded to a new version of macOS, its HFS+ filesystems are automatically converted to APFS.

While the source code for APFS is not available for me to confirm, the profiling results showing excessive time spent in lck_mtx_lock_grab_mutex(), combined with the fact that execution time decreases when the parallel process count decreases, lead me to conclude that APFS obtains a global kernel lock during read-only operations such as readdir(). In other words, APFS slows down when attempting to perform parallel read-only I/O.
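To make that reasoning concrete, here is a toy Python model, not APFS code: each simulated read-only operation either runs freely or must first take one shared lock. GLOBAL_LOCK, fake_readdir(), and run_workers() are hypothetical names; threads stand in for processes and time.sleep() stands in for the work done while the lock is held.

```python
import threading
import time

# Hypothetical stand-in for a kernel-wide lock (not APFS's actual locking).
GLOBAL_LOCK = threading.Lock()


def fake_readdir(use_global_lock: bool, cost: float) -> None:
    """Simulate one read-only directory operation taking `cost` seconds."""
    if use_global_lock:
        with GLOBAL_LOCK:  # every caller, for every directory, queues here
            time.sleep(cost)
    else:
        time.sleep(cost)  # independent operations can overlap freely


def run_workers(workers: int, ops_per_worker: int,
                use_global_lock: bool, cost: float = 0.01) -> float:
    """Run `workers` threads doing `ops_per_worker` operations; return wall seconds."""
    def worker() -> None:
        for _ in range(ops_per_worker):
            fake_readdir(use_global_lock, cost)

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start
```

On an idle machine, the locked run should take roughly workers × operations × cost (about 0.2s for 4 × 5 × 10ms) no matter how many workers are added, while the unlocked run takes roughly operations × cost. The model only captures serialization. It doesn't capture the extra cost of contended lock acquisition (the time showing up in lck_mtx_lock_grab_mutex()), which plausibly explains why the real test harness got slower, rather than merely failing to speed up, as parallelism increased.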

This isn't the first time I've encountered such behavior in a filesystem: last year I blogged about very similar behavior in AUFS, which was making Firefox CI significantly slower.

Because Python 2.7's module importing mechanism was triggering the slowness by calling readdir(), I posted to python-dev about the problem, as I thought it was important to notify the larger Python community. After all, this is a generic problem that affects the performance of starting any Python process when running on APFS. That is, if your build system invokes many Python processes in parallel, you could be impacted by this. As part of obtaining data for that post, I discovered that Python 3.7 does not call readdir() as part of module importing and therefore doesn't exhibit a severe slowdown. (Python's module importing code was rewritten significantly in Python 3, and the fix was likely introduced well before Python 3.7.)

I've produced a gist that can reproduce the problem. The script performs a recursive directory walk. It heavily exercises the opendir(), readdir(), closedir(), and lstat() functions and is essentially a benchmark of how well the filesystem and the filesystem cache can return file metadata.
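The gist itself isn't reproduced in this post. As a rough stand-in, here is a minimal Python sketch of the same kind of benchmark: it walks a tree with os.scandir() (built on opendir()/readdir()/closedir() on POSIX systems), calls os.lstat() on every entry, and runs several walks across a process pool. The names walk_tree() and run_walks() are hypothetical, and mapping `walks` and `jobs` to the `-l` and `-j` flags is inferred from the script's output, not taken from the gist.

```python
import os
import stat
import time
from concurrent.futures import ProcessPoolExecutor


def walk_tree(root: str) -> int:
    """Walk `root` recursively, lstat()ing every entry; return the entry count."""
    count = 0
    pending = [root]
    while pending:
        path = pending.pop()
        # opendir() on entry, readdir() while iterating, closedir() on exit.
        with os.scandir(path) as entries:
            for entry in entries:
                st = os.lstat(entry.path)  # metadata only; symlinks not followed
                count += 1
                if stat.S_ISDIR(st.st_mode):
                    pending.append(entry.path)
    return count


def run_walks(root: str, walks: int, jobs: int) -> float:
    """Run `walks` independent walks of `root` on `jobs` processes; return wall seconds."""
    start = time.perf_counter()
    with ProcessPoolExecutor(max_workers=jobs) as pool:
        counts = list(pool.map(walk_tree, [root] * walks))
    elapsed = time.perf_counter() - start
    print(f"ran {len(counts)} walks across {jobs} processes in {elapsed:.3f}s")
    return elapsed
```

When running this as a script, call run_walks() from under an `if __name__ == "__main__":` guard; that matters on macOS, where Python 3.8+ defaults to the spawn start method for worker processes. Running it under `time` shows the user/sys split the same way as the runs below.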

When you tell it to walk a very large directory tree - say a Firefox version control checkout (which has over 250,000 files) - the excessive time spent in the kernel is very apparent on macOS 10.13 High Sierra:

$ time ./slow-readdir.py -l 12 ~/src/firefox
ran 12 walks across 12 processes in 172.209s
real 2m52.470s
user 1m54.053s
sys 23m42.808s

$ time ./slow-readdir.py -l 12 -j 1 ~/src/firefox
ran 12 walks across 1 processes in 523.440s
real 8m43.740s
user 1m13.397s
sys 3m50.687s

$ time ./slow-readdir.py -l 18 -j 18 ~/src/firefox
ran 18 walks across 18 processes in 210.487s
real 3m30.731s
user 2m40.216s
sys 33m34.406s

On the same machine upgraded to macOS 10.14 Mojave, we see a bit of a speedup!

$ time ./slow-readdir.py -l 12 ~/src/firefox
ran 12 walks across 12 processes in 97.833s
real 1m37.981s
user 1m40.272s
sys 10m49.091s

$ time ./slow-readdir.py -l 12 -j 1 ~/src/firefox
ran 12 walks across 1 processes in 461.415s
real 7m41.657s
user 1m05.830s
sys 3m47.041s

$ time ./slow-readdir.py -l 18 -j 18 ~/src/firefox
ran 18 walks across 18 processes in 140.474s
real 2m20.727s
user 3m01.048s
sys 17m56.228s

Contrast with my i7-6700K Linux machine backed by EXT4:

$ time ./slow-readdir.py -l 8 ~/src/firefox
ran 8 walks across 8 processes in 6.018s
real 0m6.191s
user 0m29.670s
sys 0m17.838s

$ time ./slow-readdir.py -l 8 -j 1 ~/src/firefox
ran 8 walks across 1 processes in 33.958s
real 0m34.164s
user 0m17.136s
sys 0m13.369s

$ time ./slow-readdir.py -l 12 -j 12 ~/src/firefox
ran 12 walks across 12 processes in 25.465s
real 0m25.640s
user 1m4.801s
sys 1m20.488s
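One way to quantify the contrast is parallel speedup: the serial (`-j 1`) wall time divided by the fully parallel wall time for the same number of walks. The short calculation below uses only the timings reported by slow-readdir.py in the runs above. In each case the process count matches the machine's hardware thread count (6+6 on the MacBook Pro, 4+4 on the i7-6700K), so "efficiency" here is relative to logical cores, not physical ones.

```python
# Wall-clock seconds reported by slow-readdir.py in the runs above:
# (processes in the parallel run, parallel seconds, serial seconds with -j 1)
RUNS = {
    "macOS 10.13 / APFS": (12, 172.209, 523.440),
    "macOS 10.14 / APFS": (12, 97.833, 461.415),
    "Linux / EXT4": (8, 6.018, 33.958),
}

for system, (procs, parallel, serial) in RUNS.items():
    speedup = serial / parallel
    efficiency = speedup / procs  # 1.0 would mean perfectly linear scaling
    print(f"{system}: {speedup:.2f}x speedup on {procs} processes "
          f"({efficiency:.0%} parallel efficiency)")
```

This prints speedups of 3.04x (25%), 4.72x (39%), and 5.64x (71%) respectively: the Linux machine gets far more out of each added process.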

It is apparent that macOS 10.14 Mojave has received performance work relative to macOS 10.13! Overall kernel CPU time when performing parallel directory walks has decreased substantially, to ~50% of the original on some invocations! Stacks seem to reveal new code for lock acquisition, so this might indicate generic improvements to the kernel's locking mechanism rather than APFS-specific changes. Changes to file metadata caching could also be responsible, although it is difficult to tell without access to the APFS source code. Despite those improvements, APFS is still spending a lot of CPU time in the kernel. And kernel CPU time is still very high compared to Linux/EXT4, even for single process operation.
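The "~50%" figure can be checked directly from the `sys` lines of the matching High Sierra and Mojave runs. The small helper below converts bash `time` output such as `23m42.808s` into seconds; to_seconds() is a hypothetical name, and the input strings are copied verbatim from the runs above.

```python
def to_seconds(t: str) -> float:
    """Convert bash `time` output such as '23m42.808s' into seconds."""
    minutes, seconds = t.rstrip("s").split("m")
    return int(minutes) * 60 + float(seconds)


# `sys` (kernel CPU) times from the runs above: (High Sierra, Mojave).
SYS_TIMES = {
    "-l 12": ("23m42.808s", "10m49.091s"),
    "-l 12 -j 1": ("3m50.687s", "3m47.041s"),
    "-l 18 -j 18": ("33m34.406s", "17m56.228s"),
}

for args, (high_sierra, mojave) in SYS_TIMES.items():
    ratio = to_seconds(mojave) / to_seconds(high_sierra)
    print(f"{args}: Mojave kernel CPU time is {ratio:.0%} of High Sierra's")
```

The parallel invocations drop to 46% and 53%, while the single-process run barely changes (98%), which is consistent with the improvement being concentrated in contended, parallel operation.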

At this time, I haven't conducted a comprehensive analysis of APFS to determine what other filesystem operations seem to acquire global kernel locks: all I know is readdir() does. A casual analysis of profiled stacks when running Mercurial's test harness against Python 3.7 still shows apfs_* functions on the stack a lot, which seemingly indicates more APFS slowness under parallel I/O load. But HFS+ exhibited similar problems (it appeared HFS+ used a single I/O thread inside the kernel for many operations, making I/O on macOS pretty bad), so I'm not sure if these could be considered regressions the way readdir()'s new behavior is.

I've reported this issue to Apple at https://bugreport.apple.com/web/?problemID=45648013 and on OpenRadar at https://openradar.appspot.com/radar?id=5025294012383232. I'm told that issues get more attention from Apple when there are many duplicates of the same issue. So please reference this issue if you file your own report.

Now that I've elaborated on the technical details, I'd like to add some personal commentary. While this post is about APFS, this issue of global kernel locks during common I/O operations is not unique to APFS. I already referenced similar issues in AUFS. And I've encountered similar behaviors with Btrfs (although I can't recall exactly which operations). And NTFS has its own bag of problems.

This seeming pattern of global kernel locks for common filesystem operations and slow filesystems is really rubbing me the wrong way. Modern NVMe SSDs are capable of reading and writing well over 2 gigabytes per second and performing hundreds of thousands of I/O operations per second. Intel is even about to ship persistent solid state storage that plugs into DIMM slots because it is that friggin fast.

Today's storage hardware is capable of ludicrous performance. It is fast enough that you will likely saturate multiple CPU cores processing the data being read from or written to storage, especially if you are using higher-level, non-JITed (read: slower) programming languages (like Python). There has also been a trend of systems gaining CPU cores faster than they gain instructions per second per core. And SSDs only achieve these ridiculous IOPS numbers if many I/O operations are queued and can be more efficiently dispatched within the storage device. What this all means is that it probably makes sense to use parallel I/O across multiple threads in order to extract all potential performance from your persistent storage layer.
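As a minimal sketch of what "parallel I/O across multiple threads" can look like from a high-level language, the Python below reads a list of files with a thread pool. It assumes CPython, which releases the GIL while a thread is blocked in a read, so several reads can be outstanding at once and the device sees a deeper queue. read_file() and read_files_parallel() are hypothetical names, and whether extra threads actually help depends on the filesystem and kernel: as this post shows, some filesystems serialize operations internally.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable


def read_file(path: str) -> int:
    """Read an entire file and return its size in bytes."""
    with open(path, "rb") as f:
        return len(f.read())


def read_files_parallel(paths: Iterable[str], threads: int) -> int:
    """Read every file in `paths` on a pool of `threads` threads; return total bytes."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # Each worker blocks in read() independently, so up to `threads`
        # reads can be in flight at the same time.
        return sum(pool.map(read_file, paths))
```

Since there is no documented rule for picking the thread count, a pragmatic approach is to time read_files_parallel() over the same file list with, say, 1, 4, and 16 threads on the target system and keep whichever is fastest.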

It's also worth noting that we now have solid state storage that outperforms (in some dimensions) what DRAM from ~20 years ago was capable of. Put another way, I/O APIs and even some filesystems were designed in an era when RAM was slower than today's persistent storage! While I'm no filesystems or kernel expert, it does seem a bit silly to be using APIs and filesystems designed for an era when storage was multiple orders of magnitude slower and systems only had a single CPU core.

My takeaway is that I can't help but feel that systems-level software (including the kernel) is severely limiting the performance potential of modern storage devices. If we have e.g. global kernel locks when performing common I/O operations, there's no chance we'll come close to harnessing the full potential of today's storage hardware. Furthermore, the behavior of filesystems is woefully underdocumented, and software developers have little solid advice for how to achieve optimal I/O performance. As someone who cares about performance, I want to squeeze every iota of potential out of hardware. But the lack of documentation telling me which operations acquire locks, which strategies are best for, say, reading or writing 10,000 files using N threads, etc. makes this extremely difficult. And even if this documentation existed, because of differences in behavior across filesystems and operating systems and the difficulty in programmatically determining the characteristics of filesystems at run time, it is practically impossible to design a one-size-fits-all approach to high performance I/O.

The filesystem is a powerful concept. I want to embrace the "everything is a file" philosophy. Unfortunately, filesystems don't appear to be scaling very well to support the potential of modern day storage technology. We're probably at the point where commodity priced solid state storage is far more capable than today's software for the majority of applications. Storage hardware manufacturers will keep producing faster and faster storage and their marketing teams will keep convincing us that we need to buy it. But until software catches up, chances are most of us won't come close to realizing the true potential of modern storage hardware. And that's even true for specialized applications that do employ tricks taking hundreds or thousands of person hours to implement in order to eke out every iota of performance potential. The average software developer and application using filesystems as they were designed to be used has little to no chance of coming close to utilizing the performance potential of modern storage devices. That's really a shame.