Benefits of Clone Offload on Version Control Hosting

July 27, 2018 at 03:48 PM | categories: Mercurial, Mozilla

Back in 2015, I implemented a feature in Mercurial 3.6 that allows servers to advertise URLs of pre-generated bundle files. When a compatible client performs a hg clone against a repository leveraging this feature, it downloads and applies the bundle from a URL then goes back to the server and performs the equivalent of an hg pull to obtain the changes to the repository made after the bundle was generated.
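To make the two-phase flow concrete, here is a minimal sketch of it as a toy model. It is not Mercurial's implementation: the function name, the list-of-strings repository, and the `bundle_size` parameter are all illustrative assumptions.

```python
# Toy model of a clone-bundle-aware clone. Every name here is illustrative,
# not Mercurial's real API.

def clone_with_bundle(server_changesets, bundle_size):
    """Return (local_repo, pulled_from_server) after a two-phase clone."""
    # Phase 1: apply the pre-generated bundle fetched from a static URL.
    # The bundle is modeled as a snapshot of the first `bundle_size` changesets.
    local = list(server_changesets[:bundle_size])

    # Phase 2: the equivalent of `hg pull`. Only changesets created after
    # the bundle was generated are requested from the server itself.
    known = set(local)
    missing = [cs for cs in server_changesets if cs not in known]
    local.extend(missing)
    return local, missing


server = [f"rev{i}" for i in range(1000)]
repo, pulled = clone_with_bundle(server, bundle_size=990)
print(len(repo), len(pulled))
```

In this model the server only does work proportional to `pulled`, which is the point of the feature: the bulk of the data comes from static file hosting.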

On hg.mozilla.org, we've been using this feature since 2015. We host bundles in Amazon S3 and make them available via the CloudFront CDN. We perform IP filtering on the server so clients connecting from AWS IPs are served S3 URLs corresponding to the closest region / S3 bucket where bundles are hosted. Most Firefox build and test automation is run out of EC2 and automatically clones high-volume repositories from an S3 bucket hosted in the same AWS region. (Doing an intra-region transfer is very fast and clones can run at >50 MB/s.) Everyone else clones from a CDN. See our official docs for more.
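The IP-based routing described above can be sketched roughly as follows. This is a sketch under stated assumptions, not the hg.mozilla.org code: the region table, the CIDR blocks (documentation ranges), the URLs, and the `bundle_url_for` helper are all hypothetical.

```python
import ipaddress

# Hypothetical region table: client networks -> bundle URL in that region.
# The CIDR blocks are documentation ranges and the URLs are placeholders.
REGIONS = {
    "us-west-2": (["192.0.2.0/24"], "https://s3-us-west-2.example.com/bundles/repo.hg"),
    "us-east-1": (["198.51.100.0/24"], "https://s3-us-east-1.example.com/bundles/repo.hg"),
}
CDN_URL = "https://cdn.example.com/bundles/repo.hg"


def bundle_url_for(client_ip):
    """Pick the bundle URL to advertise to a client with this source IP."""
    addr = ipaddress.ip_address(client_ip)
    for networks, url in REGIONS.values():
        if any(addr in ipaddress.ip_network(net) for net in networks):
            return url  # client is in a known region: same-region bucket
    return CDN_URL  # everyone else is pointed at the CDN


print(bundle_url_for("192.0.2.17"))
print(bundle_url_for("203.0.113.5"))
```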

I last reported on this feature in October 2015. Since then, Bitbucket also deployed this feature in early 2017.

I was reminded of this clone bundles feature this week when kernel.org posted "Best way to do linux clones for your CI" and that post made the rounds in my version control circles. tl;dr: git.kernel.org apparently suffers high load due to high clone volume against the Linux Git repository. Since Git doesn't have an equivalent of clone bundles built in, they are asking people to perform equivalent functionality themselves to mitigate server load.

(A clone bundles feature has been discussed on the Git mailing list before. I remember finding old discussions when I was doing research for Mercurial's feature in 2015. I'm sure the topic has come up since.)

Anyway, I thought I'd provide an update on just how valuable the clone bundles feature is to Mozilla. In doing so, I hope maintainers of other version control tools see the obvious benefits and consider adopting the feature sooner.

In a typical week, hg.mozilla.org is currently serving ~135 TB of data. The overwhelming majority of this data is related to the Mercurial wire protocol (i.e. not HTML / JSON served from the web interface). Of that ~135 TB, ~5 TB is served from the CDN, ~126 TB is served from S3, and ~4 TB is served from the Mercurial servers themselves. In other words, we're offloading ~97% of bytes served from the Mercurial servers to S3 and the CDN.

If we assume this offloaded ~131 TB is equally distributed throughout the week, this comes out to ~1,732 Mbps on average. In reality, we do most of our load from California's Sunday evenings to early Friday evenings. And load is typically concentrated in the 12 hours when the sun is over Europe and North America (where most of Mozilla's employees are based). So the typical throughput we are offloading is more than 2 Gbps. And at a lower level, automation tends to perform clones soon after a push is made. So load fluctuates significantly throughout the day, corresponding to when pushes are made.
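The offload share and the average throughput can be re-derived from the weekly figures quoted above. A small sketch, assuming decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits per second), which the post does not state explicitly:

```python
TB = 10**12  # decimal terabytes (an assumption; the post does not say)
SECONDS_PER_WEEK = 7 * 24 * 60 * 60

# Weekly bytes served, in TB, by source.
cdn_tb, s3_tb, servers_tb = 5, 126, 4
total_tb = cdn_tb + s3_tb + servers_tb
offloaded_tb = cdn_tb + s3_tb

# Share of bytes that never touch the Mercurial servers.
offload_fraction = offloaded_tb / total_tb

# Offloaded bytes spread evenly over one week, expressed in megabits/second.
avg_mbps = offloaded_tb * TB * 8 / SECONDS_PER_WEEK / 10**6

print(f"offloaded: {offload_fraction:.1%}")
print(f"average:   {avg_mbps:,.1f} Mbps")
```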

By volume, most of the data being offloaded is for the mozilla-unified Firefox repository. Without clone bundles and without the special stream clone Mercurial feature (which we also leverage via clone bundles), the servers would be generating and sending ~1,588 MB of zstandard level 3 compressed data for each clone of that repository. Each clone would consume ~280s of CPU time on the server. And at ~195,000 clones per month, that would come out to ~309 TB/mo or ~72 TB/week. In CPU time, that would be ~54.6 million CPU-seconds, or ~21 CPU-months. I will leave it as an exercise to the reader to attach a dollar cost to how much it would take to operate this service without clone bundles. But I will say the total AWS bill for our S3 and CDN hosting for this service is under $50 per month. (It is worth noting that intra-region data transfer from S3 to other AWS services is free. And we are definitely taking advantage of that.)
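The per-month estimates follow directly from the per-clone figures. A quick sketch of the arithmetic, assuming decimal units and a 30-day month (both are my assumptions for the conversion, not stated in the post):

```python
MB_PER_CLONE = 1588          # zstandard level 3 compressed size per clone
CPU_SECONDS_PER_CLONE = 280
CLONES_PER_MONTH = 195_000
DAYS_PER_MONTH = 30          # assumption used for the week/month conversions

tb_per_month = MB_PER_CLONE * CLONES_PER_MONTH / 10**6   # decimal MB -> TB
tb_per_week = tb_per_month * 7 / DAYS_PER_MONTH
cpu_seconds = CPU_SECONDS_PER_CLONE * CLONES_PER_MONTH
cpu_months = cpu_seconds / (DAYS_PER_MONTH * 24 * 60 * 60)

print(f"{tb_per_month:.1f} TB/month, {tb_per_week:.1f} TB/week")
print(f"{cpu_seconds:,} CPU-seconds, {cpu_months:.1f} CPU-months")
```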

Despite a significant increase in the size of the Firefox repository and clone volume of it since 2015, our servers are still performing less work (in terms of bytes transferred and CPU seconds consumed) than they were in 2015. The ~97% of bytes and millions of CPU seconds offloaded in any given week have given us a lot of breathing room and have saved Mozilla several thousand dollars in hosting costs. The feature has likely helped us avoid many operational incidents due to high server load. It has made Firefox automation faster and more reliable.

Succinctly, Mercurial's clone bundles feature has successfully and largely effortlessly offloaded a ton of load from the hg.mozilla.org Mercurial servers. Other version control tools should implement this feature because it is a game changer for server operators and results in a better client-side experience (eliminates server-side CPU bottleneck and may eliminate network bottleneck due to a geo-local CDN typically being as fast as your Internet pipe). It's a win-win. And a massive win if you are operating at scale.

High-level Problems with Git and How to Fix Them

December 11, 2017 at 10:30 AM | categories: Git, Mercurial, Mozilla

I have a... complicated relationship with Git.

When Git first came onto the scene in the mid-2000s, I was initially skeptical because of its horrible user interface. But once I learned it, I appreciated its speed and features - especially the ease with which you could create feature branches, merge, and even create commits offline (which was a big deal in the era when Subversion was the dominant version control tool in open source and you needed to speak with a server in order to commit code). When I started using Git day-to-day, it was such an obvious improvement over what I was using before (mainly Subversion and even CVS).

When I started working for Mozilla in 2011, I was exposed to the Mercurial version control, which then - and still today - hosts the canonical repository for Firefox. I didn't like Mercurial initially. Actually, I despised it. I thought it was slow and its features lacking. And I frequently encountered repository corruption.

My first experience learning the internals of both Git and Mercurial came when I found myself hacking on hg-git - a tool that allows you to convert Git and Mercurial repositories to/from each other. I was hacking on hg-git so I could improve the performance of converting Mercurial repositories to Git repositories. And I was doing that because I wanted to use Git - not Mercurial - to hack on Firefox. I was trying to enable an unofficial Git mirror of the Firefox repository to synchronize faster so it would be more usable. The ulterior motive was to demonstrate that Git is a superior version control tool and that Firefox should switch its canonical version control tool from Mercurial to Git.

In what is a textbook definition of irony, what happened instead was I actually learned how Mercurial worked, interacted with the Mercurial Community, realized that Mozilla's documentation and developer practices were... lacking, and that Mercurial was actually a much, much more pleasant tool to use than Git. It's an old post, but I summarized my conversion four and a half years ago. This started a chain of events that somehow resulted in me contributing a ton of patches to Mercurial, taking stewardship of hg.mozilla.org, and becoming a member of the Mercurial Steering Committee - the governance group for the Mercurial Project.

I've been an advocate of Mercurial over the years. Some would probably say I'm a Mercurial fanboy. I reject that characterization because fanboy has connotations that imply I'm ignorant of realities. I'm well aware of Mercurial's faults and weaknesses. I'm well aware of Mercurial's relative lack of popularity. I'm well aware that this lack of popularity almost certainly turns away contributors to Firefox and other Mozilla projects because people don't want to have to learn a new tool. I'm well aware that there are changes underway to enable Git to scale to very large repositories and that these changes could threaten Mercurial's scalability advantages over Git, making choices to use Mercurial even harder to defend. (As an aside, the party most responsible for pushing Git to adopt architectural changes to enable it to scale these days is Microsoft. Could anyone have foreseen that?!)

I've achieved mastery in both Git and Mercurial. I know their internals and their command line interfaces extremely well. I understand the architecture and principles upon which both are built. I'm also exposed to some very experienced and knowledgeable people in the Mercurial Community. People who have been around version control for much, much longer than me and have knowledge of random version control tools you've probably never heard of. This knowledge and exposure allows me to make connections and see opportunities for version control that quite frankly most do not.

In this post, I'll be talking about some high-level, high-impact problems with Git and possible solutions for them. My primary goal of this post is to foster positive change in Git and the services around it. While I personally prefer Mercurial, improving Git is good for everyone. Put another way, I want my knowledge and perspective from being part of a version control community to be put to good use wherever it can.

Speaking of Mercurial, as I said, I'm a heavy contributor and am somewhat influential in the Mercurial Community. I want to be clear that my opinions in this post are my own and I'm not speaking on behalf of the Mercurial Project or the larger Mercurial Community. I also don't intend to claim that Mercurial is holier-than-thou. Mercurial has tons of user interface failings and deficiencies. And I'll even admit to being frustrated that some systemic failings in Mercurial have gone unaddressed for as long as they have. But that's for another post. This post is about Git. Let's get started.

The Staging Area

The staging area is a feature that should not be enabled in the default Git configuration.

Most people see version control as an obstacle standing in the way of accomplishing some other task. They just want to save their progress towards some goal. In other words, they want version control to be a save file feature in their workflow.

Unfortunately, modern version control tools don't work that way. For starters, they require people to specify a commit message every time they save. This in and of itself can be annoying. But we generally accept that as the price you pay for version control: that commit message has value to others (or even your future self). So you must record it.

Most people want the barrier to saving changes to be effortless. A commit message is already too annoying for many users! The Git staging area establishes a higher barrier to saving. Instead of just saving your changes, you must first stage your changes to be saved.

If you requested "save" in your favorite GUI application, text editor, etc. and it popped open a "select the changes you would like to save" dialog, you would rightly think "just save all my changes already, dammit." But this is exactly what Git does with its staging area! Git is saying "I know all the changes you made: now tell me which changes you'd like to save." To the average user, this is infuriating because it works in contrast to how the save feature works in almost every other application.

There is a counterargument to be made here. You could say that the editor/application/etc is complex - that it has multiple contexts (files) - that each context is independent - and that the user should have full control over which contexts (files) - and even changes within those contexts - to save. I agree: this is a compelling feature. However, it isn't an appropriate default feature. The ability to pick which changes to save is a power-user feature. Most users just want to save all the changes all the time. So that should be the default behavior. And the Git staging area should be an opt-in feature.

If intrinsic workflow warts aren't enough, the Git staging area has a horrible user interface. It is often referred to as the cache for historical reasons. Cache of course means something to anyone who knows anything about computers or programming. And Git's use of cache doesn't at all align with that common definition. Yet the terminology in Git persists. You have to run commands like git diff --cached to examine the state of the staging area. Huh?!

But Git also refers to the staging area as the index. And this terminology also appears in Git commands! git help commit has numerous references to the index. Let's see what git help glossary has to say::

    index
        A collection of files with stat information, whose contents are
        stored as objects. The index is a stored version of your working tree.
        Truth be told, it can also contain a second, and even a third
        version of a working tree, which are used when merging.

    index entry
        The information regarding a particular file, stored in the index.
        An index entry can be unmerged, if a merge was started, but not
        yet finished (i.e. if the index contains multiple versions of that
        file).

In terms of end-user documentation, this is a train wreck. It tells the lay user absolutely nothing about what the index actually is. Instead, it casually throws out references to stat information (requires the user know what the stat() function call and struct are) and objects (a Git term for a piece of data stored by Git). It even undermines its own credibility with that "truth be told" sentence. This definition is so bad that it would probably improve user understanding if it were deleted!

Of course, git help index says "No manual entry for gitindex". So there is literally no hope for you to get a concise, understandable definition of the index. Instead, it is one of those concepts that you think you learn from interacting with it all the time. "Oh, when I git add something it gets into this state where git commit will actually save it."

And even if you know what the Git staging area/index/cache is, it can still confound you. Do you know the interaction between uncommitted changes in the staging area and working directory when you git rebase? What about git checkout? What about the various git reset invocations? I have a confession: I can't remember all the edge cases either. To play it safe, I try to make sure all my outstanding changes are committed before I run something like git rebase because I know that will be safe.

The Git staging area doesn't have to be this complicated. A re-branding away from index to staging area would go a long way. Adding an alias from git diff --staged to git diff --cached and removing references to the cache from common user commands would make a lot of sense and reduce end-user confusion.

Of course, the Git staging area doesn't really need to exist at all! The staging area is essentially a soft commit. It performs the save progress role - the basic requirement of a version control tool. And in some aspects it is actually a better save progress implementation than a commit because it doesn't require you to type a commit message! Because the staging area is a soft commit, all workflows using it can be modeled as if it were a real commit and the staging area didn't exist at all! For example, instead of git add --interactive + git commit, you can run git commit --interactive. Or if you wish to incrementally add new changes to an in-progress commit, you can run git commit --amend or git commit --amend --interactive or git commit --amend --all. If you actually understand the various modes of git reset, you can use those to uncommit. Of course, the user interface to performing these actions in Git today is a bit convoluted. But if the staging area didn't exist, new high-level commands like git amend and git uncommit could certainly be invented.

To the average user, the staging area is a complicated concept. I'm a power user. I understand its purpose and how to harness its power. Yet when I use Mercurial (which doesn't have a staging area), I don't miss the staging area at all. Instead, I learn that all operations involving the staging area can be modeled as other fundamental primitives (like commit amend) that you are likely to encounter anyway. The staging area therefore constitutes an unnecessary burden and cognitive load on users. While powerful, its complexity and incurred confusion do not justify its existence in the default Git configuration. The staging area is a power-user feature and should be opt-in.

Branches and Remotes Management is Complex and Time-Consuming

When I first used Git (coming from CVS and Subversion), I thought branches and remotes were incredible because they enabled new workflows that allowed you to easily track multiple lines of work across many repositories. And ~10 years later, I still believe the workflows they enable are important. However, having amassed a broader perspective, I also believe their implementation is poor and this unnecessarily confuses many users and wastes the time of all users.

My initial zen moment with Git - the time when Git finally clicked for me - was when I understood Git's object model: that Git is just a content indexed key-value store consisting of different object types (blobs, trees, and commits) that have a particular relationship with each other. Refs are symbolic names pointing to Git commit objects. And Git branches - both local and remote - are just refs having a well-defined naming convention (refs/heads/<name> for local branches and refs/remotes/<remote>/<name> for remote branches). Even tags and notes are defined via refs.

Refs are a necessary primitive in Git because the Git storage model is to throw all objects into a single, key-value namespace. Since the store is content indexed and the key name is a cryptographic hash of the object's content (which for all intents and purposes is random gibberish to end-users), the Git store by itself is unable to locate objects. If all you had was the key-value store and you wanted to find all commits, you would need to walk every object in the store and read it to see if it is a commit object. You'd then need to buffer metadata about those objects in memory so you could reassemble them into say a DAG to facilitate looking at commit history. This approach obviously doesn't scale. Refs short-circuit this process by providing pointers to objects of importance. It may help to think of the set of refs as an index into the Git store.

Refs also serve another role: as guards against garbage collection. I won't go into details about loose objects and packfiles, but it's worth noting that Git's key-value store also behaves in ways similar to a generational garbage collector like you would find in programming languages such as Java and Python. The important thing to know is that Git will garbage collect (read: delete) objects that are unused. And the mechanism it uses to determine which objects are unused is to iterate through refs and walk all transitive references from that initial pointer. If there is an object in the store that can't be traced back to a ref, it is unreachable and can be deleted.
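The interplay between a content-indexed store, refs, and garbage collection can be illustrated with a toy model. This is only a sketch of the idea: the `put`, `reachable`, and `gc` helpers are hypothetical, the hashing scheme is simplified and does not match Git's real object format, and loose objects and packfiles are ignored entirely.

```python
import hashlib

store = {}  # object id -> (type, payload, ids of objects it references)


def put(obj_type, payload, children=()):
    """Store an object under the hash of its content and return its id."""
    body = f"{obj_type}\0{payload}\0{','.join(children)}".encode()
    oid = hashlib.sha1(body).hexdigest()
    store[oid] = (obj_type, payload, tuple(children))
    return oid


def reachable(refs):
    """Walk all transitive references starting from every ref."""
    seen, todo = set(), list(refs.values())
    while todo:
        oid = todo.pop()
        if oid not in seen:
            seen.add(oid)
            todo.extend(store[oid][2])
    return seen


def gc(refs):
    """Delete every object that cannot be traced back to a ref."""
    keep = reachable(refs)
    for oid in list(store):
        if oid not in keep:
            del store[oid]


blob = put("blob", "hello")
tree = put("tree", "root", [blob])
first = put("commit", "first", [tree])
second = put("commit", "second", [tree, first])       # tree plus parent commit
orphan = put("commit", "never named", [tree, first])  # no ref points here

refs = {"refs/heads/master": second}
gc(refs)
print(orphan in store, second in store)
```

The `orphan` commit is perfectly valid data, but because nothing in `refs` leads to it, the collector treats it as garbage. That is the property that later bleeds into branch management.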

Reflogs maintain the history of a value for a ref: for each ref they contain a log of what commit it was pointing to, when that pointer was established, who established it, etc. Reflogs serve two purposes: facilitating undoing a previous action and holding a reference to old data to prevent it from being garbage collected. The two use cases are related: if you don't care about undo, you don't need the old reference to prevent garbage collection.
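A reflog's two roles, undo and garbage collection guard, can be sketched with a small model. The data layout and the `update_ref`, `gc_roots`, and `undo` helpers are illustrative assumptions, not Git's on-disk reflog format or API.

```python
import time

refs = {}
reflogs = {}  # ref name -> list of (old value, new value, who, timestamp)


def update_ref(name, new_value, who):
    """Move a ref and record the move in that ref's reflog."""
    old_value = refs.get(name)
    reflogs.setdefault(name, []).append((old_value, new_value, who, time.time()))
    refs[name] = new_value


def gc_roots():
    """Current ref values plus every value a reflog still remembers."""
    roots = set(refs.values())
    for entries in reflogs.values():
        roots.update(new for _old, new, _who, _when in entries)
    return roots


def undo(name, who):
    """Point the ref back at the value it had before its latest move."""
    old_value = reflogs[name][-1][0]
    update_ref(name, old_value, who)


update_ref("refs/heads/master", "aaa111", "alice")
update_ref("refs/heads/master", "bbb222", "alice")  # e.g. after an amend
print(sorted(gc_roots()))
```

Here `aaa111` is no longer the value of any ref, yet it stays in the set of garbage collection roots because the reflog remembers it, and `undo("refs/heads/master", "alice")` would move the ref back to it.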

This design of Git's store is actually quite sensible. It's not perfect (nothing is). But it is a solid foundation to build a version control tool (or even other data storage applications) on top of.

The title of this section has to do with sub-optimal branches and remotes management. But I've hardly said anything about branches or remotes! And this leads me to my main complaint about Git's branches and remotes: that they are a very thin veneer over refs. The properties of Git's underlying key-value store unnecessarily bleed into user-facing concepts (like branches and remotes) and therefore dictate sub-optimal practices. This is what's referred to as a leaky abstraction.

I'll give some examples.

As I stated above, many users treat version control as a save file step in their workflow. I believe that any step that interferes with users saving their work is user hostile. This even includes writing a commit message! I already argued that the staging area significantly interferes with this critical task. Git branches do as well.

If we were designing a version control tool from scratch (or if you were a new user to version control), you would probably think that a sane feature/requirement would be to update to any revision and start making changes. In Git speak, this would be something like git checkout b201e96f, make some file changes, git commit. I think that's a pretty basic workflow requirement for a version control tool. And the workflow I suggested is pretty intuitive: choose the thing to start working on, make some changes, then save those changes.

Let's see what happens when we actually do this:

    $ git checkout b201e96f
    Note: checking out 'b201e96f'.

    You are in 'detached HEAD' state. You can look around, make experimental
    changes and commit them, and you can discard any commits you make in this
    state without impacting any branches by performing another checkout.

    If you want to create a new branch to retain commits you create, you may
    do so (now or later) by using -b with the checkout command again. Example:

      git checkout -b <new-branch-name>

    HEAD is now at b201e96f94... Merge branch 'rs/config-write-section-fix' into maint

    $ echo 'my change' >> README.md
    $ git commit -a -m 'my change'
    [detached HEAD aeb0c997ff] my change
     1 file changed, 1 insertion(+)

    $ git push indygreg
    fatal: You are not currently on a branch.
    To push the history leading to the current (detached HEAD)
    state now, use

        git push indygreg HEAD:<name-of-remote-branch>

    $ git checkout master
    Warning: you are leaving 1 commit behind, not connected to
    any of your branches:

      aeb0c997ff my change

    If you want to keep it by creating a new branch, this may be a good time
    to do so with:

     git branch <new-branch-name> aeb0c997ff

    Switched to branch 'master'
    Your branch is up to date with 'origin/master'.

I know what all these messages mean because I've mastered Git. But if you were a newcomer (or even a seasoned user), you might be very confused. Just so we're on the same page, here is what's happening (along with some commentary).

When I run git checkout b201e96f, Git is trying to tell me that I'm potentially doing something that could result in the loss of my data. A golden rule of version control tools is don't lose the user's data. When I run git checkout, Git should be stating the risk for data loss very clearly. But instead, the "If you want to create a new branch" sentence is hiding this fact by instead phrasing things around "retaining commits you create" rather than the possible loss of data. It's up to the user to make the connection that "retaining commits you create" actually means "don't eat my data". Preventing data loss is critical and Git should not mince words here!

The git commit seems to work like normal. However, since we're in a detached HEAD state (a phrase that is likely gibberish to most users), that commit isn't referred to by any ref, so it can be lost easily. Git should be telling me that I just committed something it may not be able to find in the future. But it doesn't. Again, Git isn't being as protective of my data as it needs to be.

The failure in the git push command is essentially telling me I need to give things a name in order to push. Pushing is effectively remote save. And I'm going to apply my reasoning about version control tools not interfering with save to pushing as well: Git is adding an extra barrier to remote save by refusing to push commits without a branch attached and by doing so is being user hostile.

Finally, we git checkout master to move to another commit. Here, Git is actually doing something halfway reasonable. It is telling me I'm leaving commits behind, which commits those are, and the command to use to keep those commits. The warning is good but not great. I think it needs to be stronger to reflect the risk around data loss if that suggested Git command isn't executed. (Of course, the reflog for HEAD will ensure that data isn't immediately deleted. But users shouldn't need to involve reflogs to not lose data that wasn't rewritten.)

The point I want to make is that Git doesn't allow you to just update and save. Because its dumb store requires pointers to relevant commits (refs) and because that requirement isn't abstracted away or paved over by user-friendly features in the frontend, Git is effectively requiring end-users to define names (branches) for all commits. If you fail to define a name, it gets a lot harder to find your commits, exchange them, and Git may delete your data. While it is technically possible to not create branches, the version control tool is essentially unusable without them.

When local branches are exchanged, they appear as remote branches to others. Essentially, you give each instance of the repository a name (the remote). And branches/refs fetched from a named remote appear as a ref in the ref namespace for that remote. e.g. refs/remotes/origin holds refs for the origin remote. (Git allows you to not have to specify the refs/remotes part, so you can refer to e.g. refs/remotes/origin/master as origin/master.)

Again, if you were designing a version control tool from scratch or you were a new Git user, you'd probably think remote refs would make good starting points for work. For example, if you know you should be saving new work on top of the master branch, you might be inclined to begin that work by running git checkout origin/master. But like our specific-commit checkout above:

    $ git checkout origin/master
    Note: checking out 'origin/master'.

    You are in 'detached HEAD' state. You can look around, make experimental
    changes and commit them, and you can discard any commits you make in this
    state without impacting any branches by performing another checkout.

    If you want to create a new branch to retain commits you create, you may
    do so (now or later) by using -b with the checkout command again. Example:

      git checkout -b <new-branch-name>

    HEAD is now at 95ec6b1b33... RelNotes: the eighth batch

This is the same message we got for a direct checkout. But we did supply a ref/remote branch name. What gives? Essentially, Git tries to enforce that the refs/remotes/ namespace is read-only and only updated by operations that exchange data with a remote, namely git fetch, git pull, and git push.

For this to work correctly, you need to create a new local branch (which initially points to the commit that refs/remotes/origin/master points to) and then switch/activate that local branch.

I could go on talking about all the subtle nuances of how Git branches are managed. But I won't.

If you've used Git, you know you need to use branches. You may or may not recognize just how frequently you have to type a branch name into a git command. I guarantee that if you are familiar with version control tools and workflows that aren't based on having to manage refs to track data, you will find Git's forced usage of refs and branches a bit absurd. I half jokingly refer to Git as Game of Refs. I say that because coming from Mercurial (which doesn't require you to name things), Git workflows feel to me like all I'm doing is typing the names of branches and refs into git commands. I feel like I'm wasting my precious time telling Git the names of things only because this is necessary to placate the leaky abstraction of Git's storage layer which requires references to relevant commits.

Git and version control don't have to be this way.

As I said, my Mercurial workflow doesn't rely on naming things. Unlike Git, Mercurial's store has an explicit (not shared) storage location for commits (changesets in Mercurial parlance). And this data structure is ordered, meaning a changeset always occurs after its parent/predecessor. This means that Mercurial can open a single file/index to quickly find all changesets. Because Mercurial doesn't need pointers to commits of relevance, names aren't required.
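To illustrate why an ordered, dedicated changeset log removes the need for names, here is a toy model. It is not Mercurial's revlog format: the list-based `changelog` and the `add_changeset` and `heads` helpers are assumptions made for the sketch.

```python
# Each entry is (node id, parent revision numbers). A revision number is
# simply the entry's position in the list.
changelog = []


def add_changeset(node, parents=()):
    """Append a changeset; its parents must already be in the log."""
    assert all(0 <= p < len(changelog) for p in parents)
    changelog.append((node, tuple(parents)))
    return len(changelog) - 1


def heads():
    """Revisions with no children, found in one pass over the log."""
    has_child = set()
    for _node, parents in changelog:
        has_child.update(parents)
    return [rev for rev in range(len(changelog)) if rev not in has_child]


root = add_changeset("a1")
main = add_changeset("b2", [root])
wip1 = add_changeset("c3", [main])   # unnamed line of work
wip2 = add_changeset("d4", [main])   # another unnamed line of work
print(heads())
```

Both unnamed lines of work are discoverable by scanning the one log, with no ref or bookmark pointing at either of them.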

My Zen of Mercurial moment came when I realized you didn't have to name things in Mercurial. Having used Git before Mercurial, I was conditioned to always be naming things. This is the Git way after all. And, truth be told, it is common to name things in Mercurial as well. Mercurial's named branches were the way to do feature branches in Mercurial for years. Some used the MQ extension (essentially a port of quilt), which also requires naming individual patches. Git users coming to Mercurial were missing Git branches and Mercurial's bookmarks were a poor port of Git branches.

But recently, more and more Mercurial users have been coming to the realization that names aren't really necessary. If the tool doesn't actually require naming things, why force users to name things? As long as users can find the commits they need to find, do you actually need names?

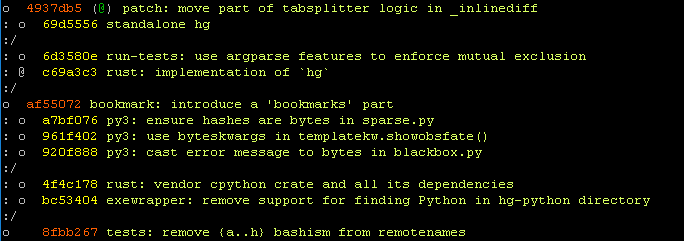

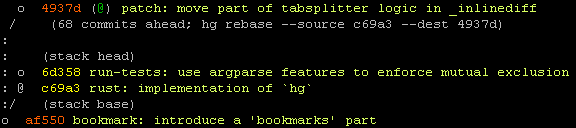

As a demonstration, my Mercurial workflow leans heavily on the hg show work

and hg show stack commands. You will need to enable the show extension

by putting the following in your hgrc config file to use them:

[extensions]

show =

Running hg show work (I have also set the config

commands.show.aliasprefix=sto enable me to type hg swork) finds all

in-progress changesets and other likely-relevant changesets (those

with names and DAG heads). It prints a concise DAG of those changesets:

And hg show stack shows just the current line of work and its

relationship to other important heads:

Aside from the @ bookmark/name set on that top-most changeset, there are no names! (That @ comes from the remote repository, which has set that name.)

Outside of code archeology workflows, hg show work shows the changesets I care about 95% of the time. With all I care about (my in-progress work and possible rebase targets) rendered concisely, I don't have to name things because I can just find whatever I'm looking for by running hg show work! Yes, you need to run hg show work, visually scan for what you are looking for, and copy a (random) hash fragment into a number of commands. This sounds like a lot of work. But I believe it is far less work than naming things. Only when you practice this workflow do you realize just how much time you actually spend finding and then typing names into hg and - especially - git commands! The ability to just hg update to a changeset and commit without having to name things is just so liberating. It feels like my version control tool is putting up fewer barriers and letting me work quickly.

Another benefit of hg show work and hg show stack is that they present a concise DAG visualization to users. This helps educate users about the underlying shape of repository data. When you see connected nodes on a graph and how they change over time, it makes it a lot easier to understand concepts like merge and rebase.

This nameless workflow may sound radical. But that's because we're all conditioned to naming things. I initially thought it was crazy as well. But once you have a mechanism that gives you rapid access to data you care about (hg show work in Mercurial's case), names become very optional. Now, a pure nameless workflow isn't without its limitations. You want names to identify the main targets for work (e.g. the master branch). And when you exchange work with others, names are easier to work with, especially since names survive rewriting. But in my experience, most of my commits are only exchanged with me (synchronizing my in-progress commits across devices) and with code review tools (which don't really need names and can operate against raw commits). My most frequent use of names comes when I'm in repository maintainer mode and I need to ensure commits have names for others to reference.

Could Git support nameless workflows? In theory it can.

Git needs refs to find relevant commits in its store. And the wire protocol uses refs to exchange data. So refs have to exist for Git to function (assuming Git doesn't radically change its storage and exchange mechanisms to mitigate the need for refs, but that would be a massive change and I don't see this happening).

While there is a fundamental requirement for refs to exist, this doesn't necessarily mean that user-facing names must exist. The reason that we need branches today is because branches are little more than a ref with special behavior. It is theoretically possible to invent a mechanism that transparently maps nameless commits onto refs. For example, you could create a refs/nameless/ namespace that was automatically populated with DAG heads that didn't have names attached. And Git could exchange these refs just like it can branches today. It would be a lot of work to think through all the implications and to design and implement support for nameless development in Git. But I think it is possible.
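To make the refs/nameless/ idea concrete, here is a minimal Python sketch. It is not how Git works today. The toy commit graph, the nameless_refs helper, and the choice to name each synthetic ref after its commit hash are all assumptions made for illustration.

```python
def nameless_refs(parents, named_refs):
    """Return {ref: commit} for every DAG head with no name attached.

    parents maps each commit to the list of its parent commits.
    named_refs maps existing ref names (branches, tags) to commits.
    """
    # A head is a commit that no other commit lists as a parent.
    has_children = {p for ps in parents.values() for p in ps}
    heads = set(parents) - has_children
    # Heads that already carry a user-facing name need no synthetic ref.
    named = set(named_refs.values())
    return {f"refs/nameless/{commit}": commit for commit in sorted(heads - named)}


# Hypothetical history: a <- b <- c (master) plus an unnamed commit d on top of b.
parents = {"a": [], "b": ["a"], "c": ["b"], "d": ["b"]}
named_refs = {"refs/heads/master": "c"}

print(nameless_refs(parents, named_refs))
```

In this toy history only d is a head without a name, so it is the only commit that gets a refs/nameless/ entry. A real design would also have to decide when such refs are pruned and how they are exchanged.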

I encourage the Git community to investigate supporting nameless workflows. Having adopted this workflow in Mercurial, Git's workflow around naming branches feels heavyweight and restrictive to me. Put another way, nameless commits are actually lighter-weight branches than Git branches! To the common user who just wants version control to be a save feature, requiring names establishes a barrier towards that goal. So removing the naming requirement would make Git simpler and more approachable to new users.

Forks aren't the Model You are Looking For

This section is more about hosted Git services (like GitHub, Bitbucket, and GitLab) than Git itself. But since hosted Git services are synonymous with Git and interaction with a hosted Git service is a regular part of a common Git user's workflow, I feel like I need to cover it. (For what it's worth, my experience at Mozilla tells me that a large percentage of people who say "I prefer Git" or "we should use Git" actually mean "I like GitHub." Git and GitHub/Bitbucket/GitLab are effectively the same thing in the minds of many and anyone finding themselves discussing version control needs to keep this in mind because Git is more than just the command line tool: it is an ecosystem.)

I'll come right out and say it: I think forks are a relatively poor model for collaborating. They are light years better than what existed before. But they are still so far from the turn-key experience that should be possible. The fork hasn't really changed much since the current implementation of it was made popular by GitHub many years ago. And I view this as a general failure of hosted services to innovate.

So we have a shared understanding, a fork (as implemented on GitHub,

Bitbucket, GitLab, etc) is essentially a complete copy of a repository

(a git clone if using Git) and a fresh workspace for additional

value-added services the hosting provider offers (pull requests, issues,

wikis, project tracking, release tracking, etc). If you open the main

web page for a fork on these services, it looks just like the main

project's. You know it is a fork because there are cosmetics somewhere

(typically next to the project/repository name) saying forked from.

Before service providers adopted the fork terminology, fork was used in open source to refer to a splintering of a project. If someone or a group of people didn't like the direction a project was taking, wanted to take over ownership of a project because of stagnation, etc, they would fork it. The fork was based on the original (and there may even be active collaboration between the fork and original), but the intent of the fork was to create distance between the original project and its new incarnation: a new entity that was sufficiently independent of the original.

Forks on service providers mostly retain this old school fork model. The fork gets a new copy of issues, wikis, etc. And anyone who forks establishes what looks like an independent incarnation of a project. It's worth noting that the execution varies by service provider. For example, GitHub won't enable Issues for a fork by default, thereby encouraging people to file issues against the upstream project it was forked from. (This is good default behavior.)

And I know why service providers (initially) implemented things this

way: it was easy. If you are building a product, it's simpler to just

say a user's version of this project is a git clone and they get

a fresh database. On a technical level, this meets the traditional

definition of fork. And rather than introduce a new term into the

vernacular, they just re-purposed fork (albeit with softer

connotations, since the traditional fork commonly implied there

was some form of strife precipitating a fork).

To help differentiate flavors of forks, I'm going to define the terms soft fork and hard fork. A soft fork is a fork that exists for purposes of collaboration. The differentiating feature between a soft fork and hard fork is whether the fork is intended to be used as its own project. If it is, it is a hard fork. If not - if all changes are intended to be merged into the upstream project and be consumed from there - it is a soft fork.

I don't have concrete numbers, but I'm willing to wager that the vast majority of forks on Git service providers which have changes are soft forks rather than hard forks. In other words, these forks exist purely as a conduit to collaborate with the canonical/upstream project (or to facilitate a short-lived one-off change).

The current implementation of fork - which borrows a lot from its predecessor of the same name - is a good - but not great - way to facilitate collaboration. It isn't great because it technically resembles what you'd expect to see for hard fork use cases even though it is used predominantly with soft forks. This mismatch creates problems.

If you were to take a step back and invent your own version control

hosted service and weren't tainted by exposure to existing services

and were willing to think a bit beyond making it a glorified frontend

for the git command line interface, you might realize that the problem

you are solving - the product you are selling - is collaboration as

a service, not a Git hosting service. And if your product is

collaboration, then implementing your collaboration model around the

hard fork model with strong barriers between the original project and

its forks is counterproductive and undermines your own product.

But this is how GitHub, Bitbucket, GitLab, and others have implemented

their product!

To improve collaboration on version control hosted services, the concept of a fork needs to be significantly curtailed. Replacing it should be a UI and workflow that revolves around the central, canonical repository.

You shouldn't need to create your own clone or fork of a repository in order to contribute. Instead, you should be able to clone the canonical repository. When you create commits, those commits should be stored and/or more tightly affiliated with the original project - not inside a fork.

One potential implementation is doable today. I'm going to call it workspaces. Here's how it would work.

There would exist a namespace for refs that can be controlled by

the user. For example, on GitHub (where my username is indygreg),

if I wanted to contribute to some random project, I would git push

my refs somewhere under refs/users/indygreg/ directly to that

project's repository. No forking necessary. If I wanted to contribute to a

project, I would just clone its repo then push to my workspace under

it. You could do this today by configuring your Git refspec properly.

For pushes, it would look something like

refs/heads/*:refs/users/indygreg/* (that tells Git to map local refs

under refs/heads/ to refs/users/indygreg/ on that remote repository).

If this became a popular feature, presumably the Git wire protocol could

be taught to advertise this feature such that Git clients automatically

configured themselves to push to user-specific workspaces attached to

the original repository.
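As a rough illustration of what that refspec does, the following Python sketch maps local ref names to their workspace names on the remote. It only handles the simple trailing-* form used above, and the map_ref helper and the example branch names are hypothetical. Real Git refspecs support more than this.

```python
def map_ref(refspec, local_ref):
    """Map a local ref to its remote name under a trailing-* refspec.

    Returns None when the local ref does not match the source side.
    """
    src, dst = refspec.split(":", 1)
    if not (src.endswith("*") and dst.endswith("*")):
        raise ValueError("this sketch only handles trailing-* refspecs")
    src_prefix = src[:-1]
    if not local_ref.startswith(src_prefix):
        return None
    # Whatever the * matched locally is appended to the destination prefix.
    return dst[:-1] + local_ref[len(src_prefix):]


REFSPEC = "refs/heads/*:refs/users/indygreg/*"

print(map_ref(REFSPEC, "refs/heads/fix-typo"))  # refs/users/indygreg/fix-typo
print(map_ref(REFSPEC, "refs/tags/v1.0"))       # None
```

In Git itself, a push refspec like this would typically be set through the remote.<name>.push configuration option rather than computed by hand.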

There are several advantages to such a workspace model. Many of them revolve around eliminating forks.

At initial contribution time, no server-side fork is necessary in order to contribute. You would be able to clone and contribute without waiting for or configuring a fork. Or if you can create commits from the web interface, the clone wouldn't even be necessary! Lowering the barrier to contribution is a good thing, especially if collaboration is the product you are selling.

In the web UI, workspaces would also revolve around the source project and not be off in their own world like forks are today. People could more easily see what others are up to. And fetching their work would require typing in their username as opposed to configuring a whole new remote. This would bring communities closer and hopefully lead to better collaboration.

Not requiring forks also eliminates the need to synchronize your fork

with the upstream repository. I don't know about you, but one of the things

that bothers me about the Game of Refs that Git imposes is that I have

to keep my refs in sync with the upstream refs. When I fetch from

origin and pull down a new master branch, I need to git merge

that branch into my local master branch. Then I need to push that new

master branch to my fork. This is quite tedious. And it is easy to merge

the wrong branches and get your branch state out of whack. There are

better ways to map remote refs into your local names to make this far

less confusing.

Another win here is not having to push and store data multiple times.

When working on a fork (which is a separate repository), after you

git fetch changes from upstream, you need to eventually git push those

into your fork. If you've ever worked on a large repository and didn't

have a super fast Internet connection, you may have been stymied by

having to git push large amounts of data to your fork. This is quite

annoying, especially for people with slow Internet connections. Wouldn't

it be nice if that git push only pushed the data that was truly new and

didn't already exist somewhere else on the server? A workspace model

where development all occurs in the original repository would fix this.

As a bonus, it would make the storage problem on servers easier because

you would eliminate thousands of forks and you probably wouldn't have to

care as much about data duplication across repos/clones because the

version control tool solves a lot of this problem for you, courtesy of

having all data live alongside or in the original repository instead of

in a fork.

Another win from workspace-centric development would be the potential to do more user-friendly things after pull/merge requests are incorporated in the official project. For example, the ref in your workspace could be deleted automatically. This would ease the burden on users to clean up after their submissions are accepted. Again, instead of mashing keys to play the Game of Refs, this would all be taken care of for you automatically. (Yes, I know there are scripts and shell aliases to make this more turn-key. But user-friendly behavior shouldn't have to be opt-in: it should be the default.)

But workspaces aren't all rainbows and unicorns. There are access control concerns. You probably don't want users able to mutate the workspaces of other users. Or do you? You can make a compelling case that project administrators should have that ability. And what if someone pushes bad or illegal content to a workspace and you receive a cease and desist? Can you take down just the offending workspace while complying with the order? And what happens if the original project is deleted? Do all its workspaces die with it? These are not trivial concerns. But they don't feel impossible to tackle either.

Workspaces are only one potential alternative to forks. And I can come up with multiple implementations of the workspace concept, although many of them are constrained by current features in the Git wire protocol. But Git is (finally) getting a more extensible wire protocol, so hopefully this will enable nice things.

I challenge Git service providers like GitHub, Bitbucket, and GitLab to think outside the box and implement something better than how forks are implemented today. It will be a large shift. But I think users will appreciate it in the long run.

Conclusion

Git is a ubiquitous version control tool. But it is frequently lampooned for its poor usability and documentation. We even have research papers telling us which parts are bad. Nobody I know has had a pleasant initial experience with Git. And it is clear that few people actually understand Git: most just know the command incantations they need to know to accomplish a small set of common activities. (If you are such a person, there is nothing to be ashamed about: Git is a hard tool.)

Popular Git-based hosting and collaboration services (such as GitHub,

Bitbucket, and GitLab) exist. While they've made strides to make it

easier to commit data to a Git repository (I purposefully avoid saying

use Git because the most usable tools seem to avoid the git command

line interface as much as possible), they are often a thin veneer over

Git itself (see forks). And Git is a thin veneer over a content

indexed key-value store (see forced usage of bookmarks).

As an industry, we should be concerned about the lousy usability of Git and the tools and services that surround it. Some may say that Git - with its near monopoly over version control mindset - is a success. I have a different view: I think it is a failure that a tool with a user experience this bad has achieved the success it has.

The cost of Git's poor usability can be measured in tens if not hundreds of millions of dollars in time people have wasted because they couldn't figure out how to use Git. Git should be viewed as a source of embarrassment, not a success story.

What's really concerning is that the usability problems of Git have been known for years. Yet it is as popular as ever and there have been few substantial usability improvements. We do have some alternative frontends floating around. But these haven't caught on.

I'm at a loss to understand how an open source tool as popular as Git has remained so mediocre for so long. The source code is out there. Anybody can submit a patch to fix it. Why is it that so many people get tripped up by the same poor usability issues years after Git became the common version control tool? It certainly appears that as an industry we have been unable or unwilling to address systemic deficiencies in a critical tool. Why this is, I'm not sure.

Despite my pessimism about Git's usability and its poor track record of being attentive to the needs of people who aren't power users, I'm optimistic that the future will be brighter. While the ~7000 words in this post pale in comparison to the aggregate word count that has been written about Git, hopefully this post strikes a nerve and causes positive change. Just because one generation has toiled with the usability problems of Git doesn't mean the next generation has to suffer through the same. Git can be improved and I encourage that change to happen. The three issues above and their possible solutions would be a good place to start.

from __past__ import bytes_literals

March 13, 2017 at 09:55 AM | categories: Python, Mercurial, Mozilla

Last year, I simultaneously committed one of the ugliest and most impressive hacks of my programming career. I haven't had time to write about it. Until now.

In summary, the hack is a

source-transforming module loader

for Python. It can be used by Python 3 to import a Python 2 source

file while translating certain primitives to their Python 3 equivalents.

It is kind of like 2to3

except it executes at run-time during import. The main goal of the

hack was to facilitate porting Mercurial to Python 3 while deferring

having to make the most invasive - and therefore most annoying -

elements of the port in the canonical source code representation.

For the technically curious, it works as follows.

The hg Python executable registers a custom

meta path finder

instance. This entity is invoked during import statements to try

to find the module being imported. It tells a later phase of the

import mechanism how to load that module from wherever it is

(usually a .py or .pyc file on disk) to a Python module object.

The custom finder only responds to requests for modules known

to be managed by the Mercurial project. For these modules, it tells

the next stage of the import mechanism to invoke a custom

SourceLoader

instance. Here's where the real magic is: when the custom loader

is invoked, it tokenizes the Python source code using the

tokenize module,

iterates over the token stream, finds specific patterns, and

rewrites them to something more appropriate. It then untokenizes

back to Python source code then falls back to the built-in loader

which does the heavy lifting of compiling the source to Python code

objects. So, we have Python 2 source files on disk that magically get

transformed to be Python 3 compatible when they are loaded by Python 3.

Oh, and there is no performance penalty for the token transformation

on subsequent loads because the transformed bytecode is cached in

the .pyc file (using a custom header so we know it was transformed

and can be invalidated when the transformation logic changes).
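The finder and loader arrangement described above can be sketched with the standard importlib machinery. This is a simplified illustration, not Mercurial's actual code. The legacy_pkg module name, the MANAGED_PREFIX constant, the class names, and the trivial transform placeholder are all assumptions. It also skips the custom .pyc header, so a stale .pyc written by the stock loader would bypass the transformation.

```python
import importlib.abc
import importlib.machinery
import importlib.util
import os
import sys
import tempfile

MANAGED_PREFIX = "legacy_pkg"  # hypothetical: only these modules get transformed


def transform(source):
    """Placeholder for the token rewriting step (here a plain text replace)."""
    return source.replace("OLD_NAME", "NEW_NAME")


class TransformingLoader(importlib.machinery.SourceFileLoader):
    def source_to_code(self, data, path):
        # Decode the raw file bytes, rewrite the source, then let the
        # built-in loader compile the result to a code object.
        source = importlib.util.decode_source(data)
        return super().source_to_code(transform(source), path)


class TransformingFinder(importlib.abc.MetaPathFinder):
    def find_spec(self, fullname, path, target=None):
        if fullname.split(".")[0] != MANAGED_PREFIX:
            return None  # not ours: let the regular finders handle it
        # Locate the module with the stock path finder, then swap the loader.
        spec = importlib.machinery.PathFinder.find_spec(fullname, path, target)
        if spec is None or not isinstance(
            spec.loader, importlib.machinery.SourceFileLoader
        ):
            return spec
        spec.loader = TransformingLoader(spec.name, spec.origin)
        return spec


sys.meta_path.insert(0, TransformingFinder())

# Demo: write a throwaway module to a temp directory and import it.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "legacy_pkg.py"), "w") as fh:
        fh.write("value = 'OLD_NAME'\n")
    sys.path.insert(0, tmp)
    try:
        import legacy_pkg
    finally:
        sys.path.remove(tmp)

print(legacy_pkg.value)
```

The finder is inserted at the front of sys.meta_path so it sees import requests before the default finders do. Everything outside the managed prefix falls through untouched.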

At the time I wrote it, the token stream manipulation converted most

string literals ('') to bytes literals (b''). In other words, it

restored the Python 2 behavior of string literals being bytes and

not unicode. We jokingly call it

from __past__ import bytes_literals (a play on Python 2's

from __future__ import unicode_literals special syntax which

changes string literals from Python 2's str/bytes type to

unicode to match Python 3's behavior).
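A stripped-down version of that literal rewriting might look like the sketch below. It assumes the simplest possible rule, that every string literal without a prefix gains a b prefix. The bytes_literals function name is made up for this example, and the real transformer is more selective than this.

```python
import io
import tokenize


def bytes_literals(source):
    """Prefix every un-prefixed string literal in source with b."""
    out = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        # Literals that already carry a prefix (b'', u'', r'', ...) start
        # with a letter rather than a quote, so they are left alone.
        if tok.type == tokenize.STRING and tok.string[0] in "'\"":
            tok = tok._replace(string="b" + tok.string)
        out.append(tok)
    return tokenize.untokenize(out)


legacy_source = "name = 'hg'\nraw = b'already bytes'\ntext = u'keep me'\n"
print(bytes_literals(legacy_source))
```

Note that this simple rule also turns docstrings into bytes literals, which is one reason a production version needs extra cases.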

Since I implemented the first version, others have implemented:

- Automatically inserting a from mercurial.pycompat import ... statement to the top of the source. This statement is the Mercurial equivalent of importing common wrapper types similar to what six provides.

- More robust function argument parsing support. (Because going from a token stream to a higher-level primitive like a function call is difficult.)

- Automatically rewriting .iteritems() to .items().
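The .iteritems() rewrite shows why working on tokens takes some care: the transformer has to look at neighboring tokens to tell a method call from an unrelated name. The sketch below is one hypothetical way to do it with the tokenize module. The rewrite_iteritems name and its matching rule are assumptions, not Mercurial's implementation.

```python
import io
import tokenize


def rewrite_iteritems(source):
    """Rewrite obj.iteritems( to obj.items( by inspecting adjacent tokens."""
    tokens = list(tokenize.generate_tokens(io.StringIO(source).readline))
    out = []
    for i, tok in enumerate(tokens):
        is_method_call = (
            tok.type == tokenize.NAME
            and tok.string == "iteritems"
            and i > 0
            and tokens[i - 1].type == tokenize.OP
            and tokens[i - 1].string == "."
            and i + 1 < len(tokens)
            and tokens[i + 1].string == "("
        )
        if is_method_call:
            tok = tok._replace(string="items")
        out.append(tok)
    return tokenize.untokenize(out)


py2_source = "d = {'a': 1}\npairs = sorted(d.iteritems())\niteritems = 'untouched'\n"
print(rewrite_iteritems(py2_source))
```

The bare iteritems variable on the last line is left alone because it is not preceded by a dot and followed by an opening parenthesis.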

As one can expect, when I tweeted a link to this commit, many Python developers (including a few CPython core developers) expressed a mix of intrigue and horror. But mostly horror.

I fully concede that what I did here is a gross hack. And, it is the intention of the Mercurial project to undo this hack and perform a proper port once Python 3 support in Mercurial is more mature. But, I want to lay out my defense on why I did this and why the Mercurial project is tolerant of this ugly hack.

Individuals within the Mercurial project have wanted to port to Python

3 for years. Until recently, it hasn't been a project priority

because a port was too much work for too little end-user gain. And, on

the technical front, a port was just not practical until Python 3.5.

(Two main blockers were no u'' literals - restored in Python 3.3 -

and no % formatting for b'' literals - restored in 3.5. And as I

understand it, senior members of the Mercurial project had to lobby

Python maintainers pretty hard to get features like % formatting of

b'' literals restored to Python 3.)

Anyway, after a number of failed attempts to initiate the Python 3 port over the years, the Mercurial project started making some positive steps towards Python 3 compatibility, such as switching to absolute imports and addressing syntax issues that allowed modules to be parsed into an AST and even compiled and loadable. These may seem like small steps, but for a larger project, it was a lot of work.

The porting effort hit a large wall when it came time to actually make the AST-valid Python code run on Python 3. Specifically, we had a strings problem.

When you write software that exchanges data between machines - sometimes machines running different operating systems or having different encodings - and there is an expectation that things work the same and data roundtrips accordingly, trying to force text encodings is essentially impossible and inevitably breaks something or someone. It is much easier for Mercurial to operate bytes first and only take text encoding into consideration when absolutely necessary (such as when emitting bytes to the terminal in the wanted encoding or when emitting JSON). That's not to say Mercurial ignores the existence of encodings. Far from it: Mercurial does attempt to normalize some data to Unicode. But it often does so with a special Python type that internally stores the raw byte sequence of the source so that a consumer can choose to operate at the bytes or Unicode level.

Anyway, this means that practically every string variable in Mercurial

is a bytes type (or something that acts like a bytes type). And

since string literals in Python 3 are the str type (which represents

Unicode), that would mean having to prefix almost every '' string

literal in Mercurial with b'' in order to placate Python 3. Having

to update every occurrence of simple primitives that could be statically

transformed automatically felt like busy work. We wanted to spend time

on the meaningful parts of the Python 3 port so we could find

interesting problems and challenges, not toil with mechanical

conversions that add little to no short-term value while simultaneously

increasing cognitive dissonance and quite possibly increasing the odds

of introducing a bug in Python 2. In other words, why should humans

do the work that machines can do for us? Thus, the source-transforming

module importer was born.

While I concede what Mercurial did is a giant hack, I maintain it was the correct thing to do. It has allowed the Python 3 port to move forward without being blocked on the more tedious and invasive transformations that could introduce subtle bugs (including performance regressions) in Python 2. Perfect is the enemy of good. People time is valuable. The source-transforming module importer allowed us to unblock an important project without sinking a lot of people time into it. I'd make that trade-off again.

While I won't encourage others to take this approach to porting to Python 3, if you want to, Mercurial's source is available under a GPL license and the custom module importer could be adapted to any project with minimal modifications. If someone does extract it as reusable code, please leave a comment and I'll update the post to link to it.

Better Compression with Zstandard

March 07, 2017 at 09:55 AM | categories: Python, Mercurial, Mozilla

I think I first heard about the Zstandard compression algorithm at a Mercurial developer sprint in 2015. At one end of a large table a few people were uttering expletives out of sheer excitement. At developer gatherings, that's the universal signal for "something is awesome." Long story short, a Facebook engineer shared a link to the RealTime Data Compression blog operated by Yann Collet (then known as the author of LZ4 - a compression algorithm known for its insane speeds) and people were completely nerding out over the excellent articles and the data within showing the beginnings of a new general purpose lossless compression algorithm named Zstandard. It promised better-than-deflate/zlib compression ratios and performance on both compression and decompression. This being a Mercurial meeting, many of us were intrigued because zlib is used by Mercurial for various functionality (including on-disk storage and compression over the wire protocol) and zlib operations frequently appear as performance hot spots.

Before I continue, if you are interested in low-level performance and software optimization, I highly recommend perusing the RealTime Data Compression blog. There are some absolute nuggets of info in there.

Anyway, over the months, the news about Zstandard (zstd) kept getting better and more promising. As the 1.0 release neared, the Facebook engineers I interact with (Yann Collet - Zstandard's author - is now employed by Facebook) were absolutely ecstatic about Zstandard and its potential. I was toying around with pre-release versions and was absolutely blown away by the performance and features. I believed the hype.

Zstandard 1.0 was released on August 31, 2016. A few days later, I started the python-zstandard project to provide a fully-featured and Pythonic interface to the underlying zstd C API while not sacrificing safety or performance. The ulterior motive was to leverage those bindings in Mercurial so Zstandard could be a first class citizen in Mercurial, possibly replacing zlib as the default compression algorithm for all operations.

Fast forward six months and I've achieved many of those goals. python-zstandard has a nearly complete interface to the zstd C API. It even exposes some primitives not in the C API, such as batch compression operations that leverage multiple threads and use minimal memory allocations to facilitate insanely fast execution. (Expect a dedicated post on python-zstandard from me soon.)

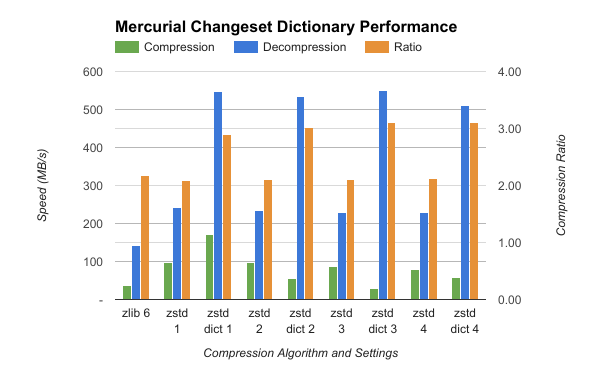

Mercurial 4.1 ships with the python-zstandard bindings. Two Mercurial 4.1 peers talking to each other will exchange Zstandard compressed data instead of zlib. For a Firefox repository clone, transfer size is reduced from ~1184 MB (zlib level 6) to ~1052 MB (zstd level 3) in the default Mercurial configuration while using ~60% of the CPU that zlib required on the compressor end. When cloning from hg.mozilla.org, the pre-generated zstd clone bundle hosted on a CDN using maximum compression is ~707 MB - ~60% the size of zlib! And, work is ongoing for Mercurial to support Zstandard for on-disk storage, which should bring considerable performance wins over zlib for local operations.
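For readers who want to check the percentages, the arithmetic behind those sizes is short. The variable names are just labels for the figures quoted above.

```python
zlib_wire_mb = 1184    # Firefox clone transfer with zlib level 6
zstd_wire_mb = 1052    # same clone with zstd level 3
zstd_bundle_mb = 707   # pre-generated zstd clone bundle, maximum compression

wire_ratio = zstd_wire_mb / zlib_wire_mb
bundle_ratio = zstd_bundle_mb / zlib_wire_mb

print(f"zstd wire transfer: {wire_ratio:.0%} of zlib "
      f"({zlib_wire_mb - zstd_wire_mb} MB saved)")
print(f"zstd clone bundle: {bundle_ratio:.0%} of zlib")
```

So the default wire transfer is roughly 89% of the zlib size (about 132 MB saved), and the clone bundle comes in at roughly 60%.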

I've learned a lot working on python-zstandard and integrating Zstandard into Mercurial. My primary takeaway is Zstandard is awesome.

In this post, I'm going to extol the virtues of Zstandard and provide reasons why I think you should use it.

Why Zstandard

The main objective of lossless compression is to spend one resource (CPU) so that you may reduce another (I/O). This trade-off is usually made because data - either at rest in storage or in motion over a network or even through a machine via software and memory - is a limiting factor for performance. So if compression is needed for your use case to mitigate I/O being the limiting resource and you can swap in a different compression algorithm that magically reduces both CPU and I/O requirements, that's pretty exciting. At scale, better and more efficient compression can translate to substantial cost savings in infrastructure. It can also lead to improved application performance, translating to better end-user engagement, sales, productivity, etc. This is why companies like Facebook (Zstandard), Google (brotli, snappy, zopfli), and Pied Piper (middle-out) invest in compression.

Today, the most widely used compression algorithm in the world is likely DEFLATE. And, software most often interacts with DEFLATE via what is likely the most widely used software library in the world, zlib.

Being at least 27 years old, DEFLATE is getting a bit long in the tooth. Computers are completely different today than they were in 1990. The Pentium microprocessor debuted in 1993. If memory serves (pun intended), it used PC66 DRAM, which had a transfer rate of 533 MB/s. For comparison, a modern NVMe M.2 SSD (like the Samsung 960 PRO) can read at 3000+ MB/s and write at 2000+ MB/s. In other words, persistent storage today is faster than the RAM from the era when DEFLATE was invented. And of course CPU and network speeds have increased as well. We also have completely different instruction sets on CPUs for well-designed algorithms and software to take advantage of. What I'm trying to say is the market is ripe for DEFLATE and zlib to be dethroned by algorithms and software that take into account the realities of modern computers.

(For the remainder of this post I'll use zlib as a stand-in for DEFLATE because it is simpler.)

Zstandard initially piqued my attention by promising better-than-zlib compression and performance in both the compression and decompression directions. That's impressive. But it isn't unique. Brotli achieves the same, for example. But what kept my attention was Zstandard's rich feature set, tuning abilities, and therefore versatility.

In the sections below, I'll describe some of the benefits of Zstandard in more detail.

Before I do, I need to throw in an obligatory disclaimer about data and numbers that I use. Benchmarking is hard. Benchmarks should not be trusted. There are so many variables that can influence performance and benchmarks. (A recent example that surprised me is the CPU frequency/power ramping properties of Xeon versus non-Xeon Intel CPUs. tl;dr a Xeon won't hit max CPU frequency if only a core or two is busy, meaning that any single or low-threaded benchmark is likely misleading on Xeons unless you change power settings to mitigate its conservative power ramping defaults. And if you change power settings, does that reflect real-life usage?)

Reporting useful and accurate performance numbers for compression is hard because there are so many variables to care about. For example:

- Every corpus is different. Text, JSON, C++, photos, numerical data, etc all exhibit different properties when fed into compression and could cause compression ratios or speeds to vary significantly.

- Few large inputs versus many smaller inputs (some algorithms work better on large inputs; some libraries have high per-operation overhead).

- Memory allocation and use strategy. Performance can vary significantly depending on how a compression library allocates, manages, and uses memory. This can be an implementation specific detail as opposed to a core property of the compression algorithm.

Since Mercurial is the driver for my work in Zstandard, the data and numbers I report in this post are mostly Mercurial data. Specifically, I'll be referring to data in the mozilla-unified Firefox repository. This repository contains over 300,000 commits spanning almost 10 years. The data within is a good mix of text (mostly C++, JavaScript, Python, HTML, and CSS source code and other free-form text) and binary (like PNGs). The Mercurial layer adds some binary structures to e.g. represent metadata for deltas, diffs, and patching.

There are two Mercurial-specific pieces of data I will use. One is a Mercurial bundle. This is essentially a representation of all data in a repository. It stores a mix of raw, fulltext data and deltas on that data. For the mozilla-unified repo, an uncompressed bundle (produced via hg bundle -t none-v2 -a) is ~4457 MB. The other piece of data is revlog chunks. This is a mix of fulltext and delta data for a specific item tracked in version control. I frequently use the changelog corpus, which is the fulltext data describing changesets or commits to Firefox.

The numbers quoted and used for charts in this post are available in a Google Sheet.

All performance data was obtained on an i7-6700K running Ubuntu 16.10 (Linux 4.8.0) with a mostly stock config. Benchmarks were performed in memory to mitigate storage I/O or filesystem interference. Memory used is DDR4-2133 with a cycle time of 35 clocks.

While I'm pretty positive about Zstandard, it isn't perfect. There are corpora for which Zstandard performs worse than other algorithms, even ones I compare it directly to in this post. So, your mileage may vary. Please enlighten me with your counterexamples by leaving a comment.

With that (rather large) disclaimer out of the way, let's talk about what makes Zstandard awesome.

Flexibility for Speed Versus Size Trade-offs

Compression algorithms typically contain parameters to control how much work to do. You can choose to spend more CPU to (hopefully) achieve better compression or you can spend less CPU to sacrifice compression. (OK, fine, there are other factors like memory usage at play too. I'm simplifying.) This is commonly exposed to end-users as a compression level. (In reality there are often multiple parameters that can be tuned. But I'll just use level as a stand-in to represent the concept.)

But even with adjustable compression levels, the performance of many compression algorithms and libraries tends to fall within a relatively narrow window. In other words, many compression algorithms focus on niche markets. For example, LZ4 is super fast but doesn't yield great compression ratios. LZMA yields terrific compression ratios but is extremely slow.
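To make the "level" knob concrete, here is a minimal sketch using Python's standard-library zlib module. It is not the harness used to produce the numbers in this post: the `measure_levels` and `make_sample` helpers and the synthetic corpus are assumptions made purely for illustration, and absolute speeds will vary by machine.

```python
import time
import zlib


def make_sample(size=2_000_000):
    """Build a hypothetical, source-like corpus of roughly `size` bytes."""
    lines = []
    total = 0
    i = 0
    while total < size:
        line = b"int value_%d = compute(%d, %d);\n" % (i, i * 7, i % 13)
        lines.append(line)
        total += len(line)
        i += 1
    return b"".join(lines)


def measure_levels(data, levels=(1, 6, 9)):
    """Compress `data` at each zlib level; return (level, ratio, MB/s) tuples."""
    results = []
    for level in levels:
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        # Guard against a zero reading from a very coarse timer.
        elapsed = max(time.perf_counter() - start, 1e-9)
        ratio = len(data) / len(compressed)
        speed = len(data) / elapsed / 1_000_000
        results.append((level, ratio, speed))
    return results


if __name__ == "__main__":
    for level, ratio, speed in measure_levels(make_sample()):
        print(f"zlib level {level}: ratio {ratio:.2f}, {speed:.1f} MB/s")
```

Each (ratio, speed) pair corresponds to one point on a curve like the ones charted in this post; repeating the loop for another algorithm yields another curve.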

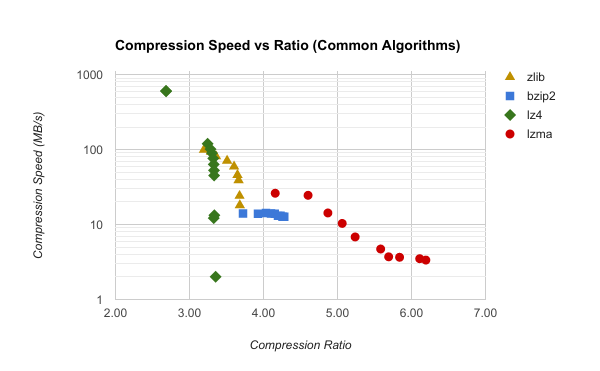

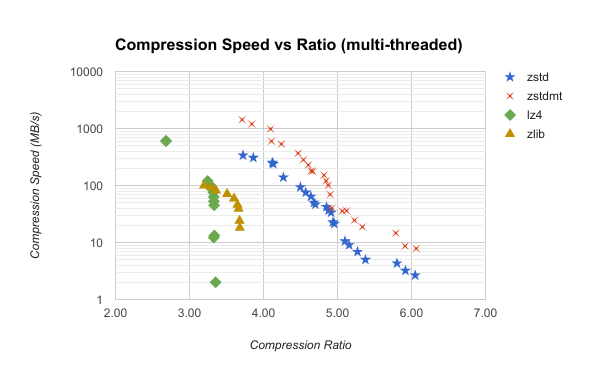

This can be visualized in the following chart showing results when compressing a mozilla-unified Mercurial bundle:

This chart plots the logarithmic compression speed in megabytes per second against achieved compression ratio. The further right a data point is, the better the compression and the smaller the output. The higher up a point is, the faster compression is.

The ideal compression algorithm lives in the top right, which means it compresses well and is fast. But the powers of mathematics push compression algorithms away from the top right.

On to the observations.

LZ4 is highly vertical, which means its compression ratios are limited in variance but it is extremely flexible in speed. So for this data, you might as well stick to a lower compression level because higher values don't buy you much.

Bzip2 is the opposite: a horizontal line. That means it is consistently the same speed while yielding different compression ratios. In other words, you might as well crank bzip2 up to maximum compression because it doesn't have a significant adverse impact on speed.

LZMA and zlib are more interesting because they exhibit more variance in both the compression ratio and speed dimensions. But let's be frank, they are still pretty narrow. LZMA looks pretty good from a shape perspective, but its top speed is just too slow - only ~26 MB/s!

This small window of flexibility means that you often have to choose a compression algorithm based on the speed versus size trade-off you are willing to make at that time. That choice often gets baked into software. And as time passes and your software or data gains popularity, changing the software to swap in or support a new compression algorithm becomes harder because of the cost and disruption it will cause. That's technical debt.

What we really want is a single compression algorithm that occupies lots of space in both dimensions of our chart - a curve that has high variance in both compression speed and ratio. Such an algorithm would allow you to make an easy decision choosing a compression algorithm without locking you into a narrow behavior profile. It would allow you to make a completely different size versus speed trade-off in the future by only adjusting a config knob or two in your application - no swapping of compression algorithms needed!

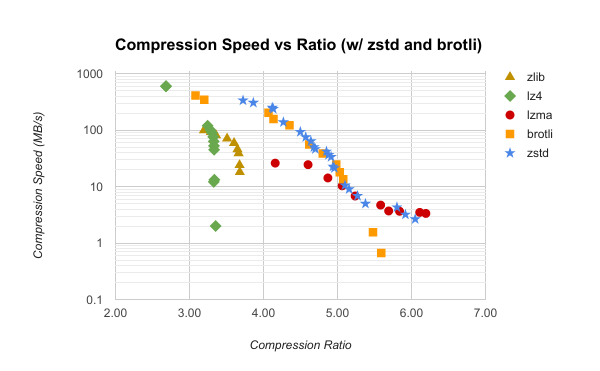

As you can guess, Zstandard fulfills this role. This can clearly be seen in the following chart (which also adds brotli for comparison).

The advantages of Zstandard (and brotli) are obvious. Zstandard's compression speeds go from ~338 MB/s at level 1 to ~2.6 MB/s at level 22 while covering compression ratios from 3.72 to 6.05. On one end, zstd level 1 is ~3.4x faster than zlib level 1 while achieving better compression than zlib level 9! That fastest speed is only 2x slower than LZ4 level 1. On the other end of the spectrum, zstd level 22 runs ~1 MB/s slower than LZMA at level 9 and produces a file that is only 2.3% larger.

It's worth noting that zstd's C API exposes several knobs for tweaking the compression algorithm. Each compression level maps to a pre-defined set of values for these knobs. It is possible to set these values beyond the ranges exposed by the default compression levels 1 through 22. I've done some basic experimentation with this and have made compression even faster (while sacrificing ratio, of course). This covers the gap between Zstandard and brotli on this end of the tuning curve.

The wide span of compression speeds and ratios is a game changer for compression. Unless you have special requirements such as lightning fast operations (which LZ4 can provide) or special corpora that Zstandard can't handle well, Zstandard is a very safe and flexible choice for general purpose compression.

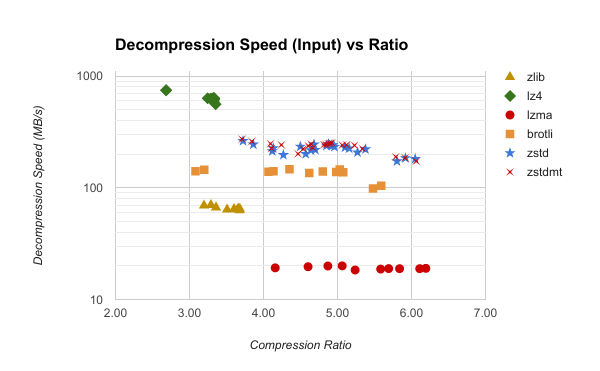

Multi-threaded Compression

Zstd 1.1.3 contains a multi-threaded compression API that allows a compression operation to leverage multiple threads. The output from this API is compatible with the Zstandard frame format and doesn't require any special handling on the decompression side. In other words, a compressor can switch to the multi-threaded API and decompressors won't care.

This is a big deal for a few reasons. First, today's advancements in computer processors tend to yield more capacity from more cores, not from faster clocks and better cycle efficiency (although many cases do benefit greatly from modern instruction sets like AVX and therefore better cycle efficiency). Second, so many compression libraries are only single-threaded and require consumers to invent their own framing formats or storage models to facilitate multi-threading. (See Blosc for such a library.) Lack of a multi-threaded API in the compression library means trusting another piece of software or writing your own multi-threaded code.
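To illustrate what "inventing your own framing format" looks like with a single-threaded library, here is a rough sketch built on Python's standard-library zlib. The chunk size, the 4-byte length prefix, and the `compress_chunked` / `decompress_chunked` names are all assumptions made up for this example. It is not how Zstandard's multi-threaded API works internally.

```python
import struct
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per chunk; an arbitrary choice for this sketch.


def compress_chunked(data, threads=4, level=6):
    """Compress fixed-size chunks in parallel and join them with length prefixes."""
    chunks = [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # CPython's zlib releases the GIL while compressing, so threads can overlap.
        compressed = list(pool.map(lambda c: zlib.compress(c, level), chunks))
    # Hand-rolled frame: 4-byte little-endian length, then the compressed chunk.
    return b"".join(struct.pack("<I", len(c)) + c for c in compressed)


def decompress_chunked(blob):
    """Reverse compress_chunked() by walking the length-prefixed frames."""
    out = []
    offset = 0
    while offset < len(blob):
        (length,) = struct.unpack_from("<I", blob, offset)
        offset += 4
        out.append(zlib.decompress(blob[offset:offset + length]))
        offset += length
    return b"".join(out)
```

The cost is that every reader needs `decompress_chunked` (or equivalent) because the output is no longer a plain zlib stream. That is the burden a multi-threaded API producing standard frames removes.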

The following chart adds a plot of Zstandard multi-threaded compression with 4 threads.

The existing curve for Zstandard basically shifted straight up. Nice!

The ~338 MB/s speed for single-threaded compression on zstd level 1 increases to ~1,376 MB/s with 4 threads. That's ~4.06x faster. And, it is ~2.26x faster than the previous fastest entry, LZ4 at level 1! The output size only increased by ~4 MB or ~0.3% over single-threaded compression.

The scaling properties for multi-threaded compression on this input are terrific: all 4 cores are saturated and the output size barely changed.

Because Zstandard's multi-threaded compression API produces data compatible with any Zstandard decompressor, it can logically be considered an extension of compression levels. This means that the already extremely flexible speed vs ratio curve becomes even wider in the speed axis. Zstandard was already a justifiable choice with its extreme versatility. But when you throw in native multi-threaded compression API support, the flexibility for tuning compression performance is just absurd. With enough cores, you are likely to run into I/O limits long before you exhaust the CPU, at which point you can crank up the compression level and sacrifice as much CPU as you are willing to burn. That's a good position to be in.

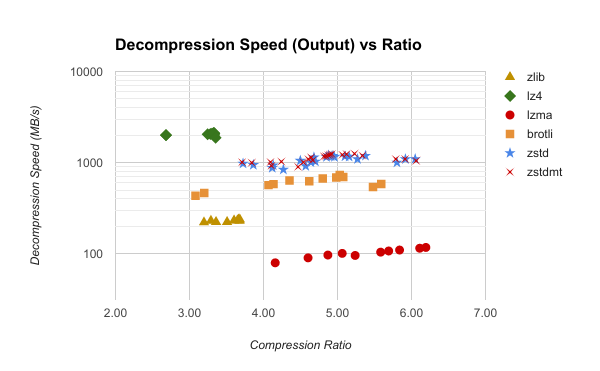

Decompression Speed

Compression speed and ratios only tell half the story about a compression algorithm. Except for archiving scenarios where you write once and read rarely, you probably care about decompression performance.

Popular compression algorithms like zlib and bzip2 have less-than-stellar decompression speeds. On my i7-6700K, zlib decompression can deliver decompressed output at 200+ MB/s for many data sets. However, on the input/compressed end, it frequently fails to reach 100 MB/s or even 80 MB/s. This is significant because if your application is reading data over a 1 Gbps network or from a local disk (modern SSDs can read at several hundred MB/s or more), then your application has a CPU bottleneck at decoding the data - and that's before you actually do anything useful with the data in the application layer! (Remember: the idea behind compression is to spend CPU to mitigate an I/O bottleneck. So if compression makes you CPU bound, you've undermined the point of compression!) And if my Skylake CPU running at 4.0 GHz is CPU - not I/O - bound, a Xeon in a data center will be even slower and even more CPU bound (Xeons tend to run at much lower clock speeds - the laws of thermodynamics require that in order to run more cores in the package). In short, if you are using zlib for high throughput scenarios, there's a good chance it is a bottleneck and slowing down your application.
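The arithmetic behind that bottleneck claim can be sketched in a few lines of Python. The helper names are hypothetical, and the conversion uses decimal units and ignores protocol overhead, so treat the numbers as back-of-the-envelope only.

```python
def link_mb_per_s(gbps):
    """Convert a link speed in Gbps to MB/s (decimal units, no protocol overhead)."""
    return gbps * 1000 / 8


def bottleneck(io_mb_per_s, decoder_input_mb_per_s):
    """Name the slower stage when compressed bytes flow from I/O into a decoder."""
    return "cpu" if decoder_input_mb_per_s < io_mb_per_s else "io"


link = link_mb_per_s(1)  # 125.0 MB/s of compressed input from a 1 Gbps link
for rate in (80, 100, 250):
    # 80 and 100 MB/s are the zlib input-end figures quoted above;
    # 250 MB/s stands in for a faster decoder.
    print(rate, bottleneck(link, rate))
```

A decoder that consumes compressed input at 80-100 MB/s cannot keep up with the 125 MB/s a saturated 1 Gbps link can deliver, so the CPU becomes the limiting stage.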

We again measure the speed of algorithms using a Firefox Mercurial bundle. The following charts plot decompression speed versus ratio for this file. The first chart measures decompression speed on the input end of the decompressor. The second measures speed at the output end.

Zstandard matches its great compression speed with great decompression speed. Zstandard can deliver decompressed output at 1000+ MB/s while consuming input at 200-275 MB/s. Furthermore, decompression speed is mostly independent of the compression level. (Although higher compression levels require more memory in the decompressor.) So, if you want to throw more CPU at re-compression later so data at rest takes less space, you can do that without sacrificing read performance. I haven't done the math, but there is probably a break-even point where having dedicated machines re-compress terabytes or petabytes of data at rest offsets the costs of those machines through reduced storage costs.
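The input-end and output-end speeds differ only in which byte count gets divided by the elapsed time, so for a single operation the output rate is the input rate multiplied by the compression ratio. Here is a minimal sketch of measuring both, using standard-library zlib as a stand-in decompressor. The `decompression_rates` helper is hypothetical and is not the code used for the charts.

```python
import time
import zlib


def decompression_rates(compressed):
    """Return (input MB/s, output MB/s, ratio) for one zlib decompression."""
    start = time.perf_counter()
    raw = zlib.decompress(compressed)
    # Guard against a zero reading from a very coarse timer.
    elapsed = max(time.perf_counter() - start, 1e-9)
    input_rate = len(compressed) / elapsed / 1_000_000
    output_rate = len(raw) / elapsed / 1_000_000
    return input_rate, output_rate, len(raw) / len(compressed)
```

This is why a better compression ratio widens the gap between the two figures: the same wall-clock time produces more output bytes per input byte.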

While Zstandard is not as fast decompressing as LZ4 (which can consume compressed input at 500+ MB/s), its performance is often ~4x faster than zlib. On many CPUs, this puts it well above 1 Gbps, which is often desirable to avoid a bottleneck at the network layer.

It's also worth noting that while Zstandard and brotli were comparable on the compression half of this data, Zstandard has a clear advantage doing decompression.

Finally, you don't appear to pay a price for multi-threaded Zstandard compression on the decompression side (zstdmt in the chart).

Dictionary Support

The examples so far in this post have used a single 4,457 MB piece of input data to measure behavior. Large data can behave completely differently from small data. This is because so much of what compression algorithms do is find patterns that came before so incoming data can be referenced to old data instead of uniquely stored. And if data is small, there isn't much of it that came before to reference!

This is often why many small, independent chunks of input compress poorly compared to a single large chunk. This can be demonstrated by comparing the widely-used zip and tar archive formats. On the surface, both do the same thing: they are a container of files. But they employ compression at different phases. A zip file will zlib compress each entry independently. However, a tar file doesn't use compression internally. Instead, the tar file itself is fed into a compression algorithm and compressed as a whole.
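The zip-versus-tar difference can be approximated with a small experiment. This is only a sketch using Python's standard-library zlib: it ignores the container overhead of real zip and tar files, and the corpus of tiny JavaScript-like entries is made up for illustration.

```python
import zlib


def size_independent(files, level=9):
    """Total size when each entry is compressed on its own (zip-like)."""
    return sum(len(zlib.compress(f, level)) for f in files)


def size_single_stream(files, level=9):
    """Size when all entries are concatenated and compressed once (tar.gz-like)."""
    return len(zlib.compress(b"".join(files), level))


# Hypothetical corpus: many small, similar files.
files = [
    b"function handler_%d(event) { return event.target.value + %d; }\n" % (i, i)
    for i in range(500)
]

print("independent:", size_independent(files))
print("single stream:", size_single_stream(files))
```

In the single stream, each entry can reference patterns from the entries before it. When every entry is compressed on its own, the compressor starts with no history each time, which is the effect described above.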

We can observe the difference on real world data. Firefox ships with a file named omni.ja. Despite the weird extension, this is a zip file. The file contains most of the assets for non-compiled code used by Firefox. This includes the JavaScript, HTML, CSS, and images that power many parts of the Firefox frontend. The file weighs in at 9,783,749 bytes for the 64-bit Windows Firefox Nightly from 2017-03-06. (Or 9,965,793 bytes when using zip -9 - the code for generating omni.ja is smarter than zip and creates smaller files.) But a zlib level 9 compressed tar.gz file of that directory is 8,627,155 bytes. That 1,156 KB / 13% size difference is significant when you are talking about delivering bits to end users! (In this case, the content within the archive needs to be individually addressable to facilitate fast access to any item without having to decompress the entire archive: this matters for performance.)

A more extreme example of the differences between zip and tar is the files in the Firefox source checkout. On revision a08ec245fa24 of the Firefox Mercurial repository, a zip file of all files in version control is 430,446,549 bytes versus 322,916,403 bytes for a tar.gz file (1,177,430,383 bytes uncompressed spanning 180,912 files). Using Zstandard, compressing each file discretely at compression level 3 yields 391,387,299 bytes of compressed data versus 294,926,418 as a single stream (without the tar container). Same compression algorithm. Different application method. Drastically different results. That's the impact of input size on compression performance.

While the compression ratio and speed of a single large stream is

often better than multiple smaller chunks, there are still